Combining Machine Learning with Credit Risk Scorecards

Here's how to get the full power of machine learning without losing the transparency that’s important from credit risk scorecards

With all the benefits of artificial intelligence, many of our customers are wanting to leverage machine learning to improve other types of analytic models already in use, such as credit risk assessment. With 30 years of experience with AI and machine learning under our belt, we can certainly help.

I have blogged about how we use AI to build credit risk models. In this post, I’d like to drill into one of the examples, to discuss an approach that provides a way to harness the full power of machine learning in the credit risk arena for those who are still on the fence and continuing to use scorecard models. It is also noteworthy that in the intervening years, FICO has made tremendous progress in making AI models explainable, constrained and engineerable and able to withstand regulatory scrutiny, thus making AI models suitable for credit risk models. These have been discussed in other blog articles. .

How Do You Build a Model with Limited Data?

A traditional credit risk scorecard model generates a score reflecting probability of default, using various customer characteristics as inputs to the model. These characteristics could be any customer information that is deemed relevant for assessing the probability of default, provided the information is also allowed by regulations. The input is binned into different value ranges and each of these bins is assigned a score weight. While scoring an individual, the score weights corresponding to the individual’s information are added up to produce the score.

While building a scorecard model, we need to “bin” the characteristics into value ranges, and the bins are meant to maximize the separation between known good cases and known bad cases. This separation is measured using weight of evidence (WoE), a logarithmic ratio of fraction of good cases and fraction of bad cases present in the bin. A WoE of 0 means that the bin has same distribution of good and bad cases as the overall population. The further away this value is from 0, the more concentration the bin has of one type of case versus the other, as compared to the overall population. A scorecard will generally have a few bins, with a smooth distribution of WoE.

As I described in my post, our project was to build credit risk models for a home equity portfolio. Home equity lending slowed dramatically after the recession, and due to this we had few bad exemplars in the development sample, and only a 0.2% default rate. It was difficult to build models using traditional scorecard techniques.

The primary reason for this is the inability of a scorecard model to interpolate information. Information needs to be explicitly provided to the scorecard model and the standard way to do this is by providing sufficient good and bad counts for each bin to compute reliable WoE. If good or bad counts are not sufficient, as in this case, this approach ends up yielding noisy, choppy WoE distribution across bins, leading to weak-performing scorecard models.

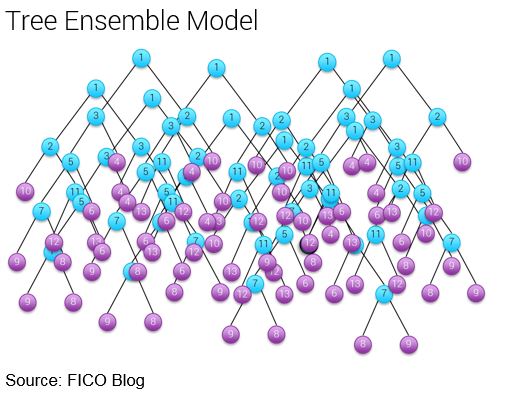

Next, we used a machine learning algorithm called Tree Ensemble Modeling or TEM. TEM involves building multiple “tree” models, where each node of the tree is a variable which is split into two further sub-trees.

Each tree model that we build in TEM is built on a subset of the training dataset, and uses just a handful of characteristics as input. This limits the degrees of freedom of the tree model, yields a shallow tree as a consequence and ensures that the splitting of the variables is limited. This allows us to meet the requirement on the minimum number of good and bad cases more diligently.

The following schematic shows an artistic rendition of a TEM, depicting multiple shallow trees in a group or an Ensemble. The final score output, produced through Ensemble Modeling, is usually an average of the scores of all the constituent tree models in the Ensemble.

Such a model can have thousands of trees and tens of thousands of parameters that have no simple interpretation. Unlike a scorecard, you can’t tell a borrower, a regulator or even a risk analyst why someone scored the way they did. This inability to explain the reason why someone got a particular score is a big limitation of an approach like TEM.

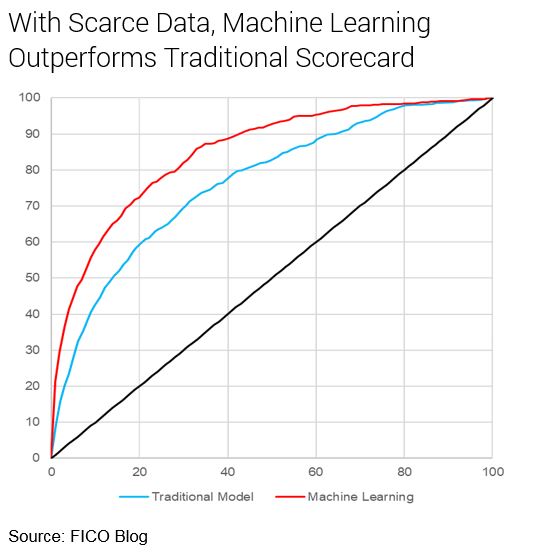

However, by building a machine learning model, we were able to confirm that our scorecard approach was losing a significant amount of predictive power. Although not practical for use, the machine learning score outperformed the scorecard. Our next challenge was to try and narrow the performance gap between the TEM and the scorecard models.

Scorecards Mimicking Machine Learning

FICO has faced this challenge many times before: where there is a demand for a scorecard model even though a machine learning model provides a much more powerful decisioning capability: How do you retain the deep insights of machine learning and AI, which can uncover patterns scorecard development approaches can’t and impart that knowledge to a scorecard model?

Over the years we have developed practical ways to address the previously identified limitations of machine learning models that hitherto didn’t allow them to be applied in regulated decision scenarios, such as lending and credit risk decisioning. For instance, we have developed mechanisms for imputing domain knowledge and explainability into neural networks and other machine learning models. We have also developed methodologies to apply constraints on the relationships between input and output of machine learning models, as well as engineer those relationships much like in case of scorecards.

Still there are situations where some businesses are hesitant in using machine learning models directly. In such cases, we recommend leveraging a methodology called Teacher-Student learning. In this approach a machine learning model is first trained which is capable of learning complex non-linear relationships in the data. Such a model is called a “Teacher” model. The TEM machine learning model that we discussed earlier can act as the Teacher model.

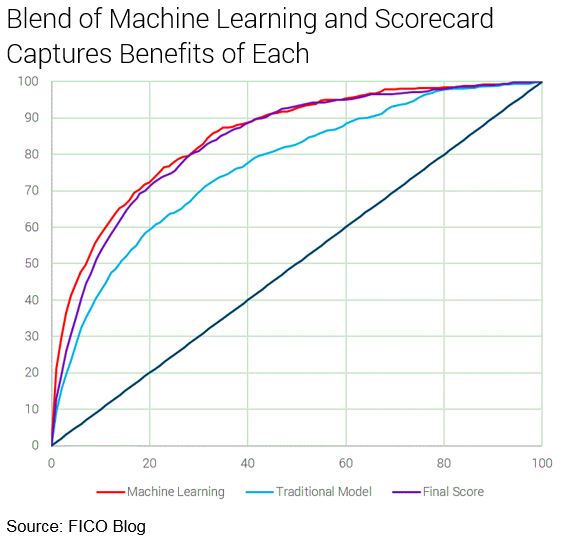

We then train a “Student” model which is a set of segmented scorecards, which recodes the patterns and insights discovered using the Teacher machine learning model. We aim to match the score distribution generated by the Teacher model, instead of relying on the WoE approach that we discussed earlier. So instead of providing good and bad data points and directly computing the WoE, the score distribution in each bin derived from the machine learning Teacher model ends up providing an estimate of WoE.

Significantly, the final model is almost as predictive as the Teacher machine learning model. An out-of-time validation of the final model demonstrates that it performs well over a period of time, as shown in the following figure.

The final result of this approach is a strong, palatable segmented scorecard model. Our hybrid approach overcame the limitations imposed by the fewer number of bad cases. Whereas previously it was considered impossible to build powerful scorecards for problem spaces with such sparse bad cases, the Teacher-Student approach allows us to do so, whenever a machine learning algorithm can be built to extract more signal from such datasets.

It is worth noting that another machine learning technique, such as a neural network model, would work as effectively as TEM. Thus, the approach described above is not limited by the choice of machine learning architecture, if the machine learning architecture is designed to extract more signal from the training data than the scorecard model.

This is just one approach that FICO data science teams use to leverage the power of AI in heavily regulated areas where reasons related to score loss are needed. It represents our commitment to expanding AI to new areas for our customers, something we have been practicing for more than 30 years.

How FICO Can Help You Build Better Risk Models

- Explore our modelling services

- Read more about Responsible AI

- Download our Playbook for Responsible AI

This is an update of a post from 2017.

Popular Posts

Average U.S. FICO Score at 717 as More Consumers Face Financial Headwinds

Outlier or Start of a New Credit Score Trend?

Read more

Average U.S. FICO® Score at 716, Indicating Improvement in Consumer Credit Behaviors Despite Pandemic

The FICO Score is a broad-based, independent standard measure of credit risk

Read more

Average U.S. FICO® Score stays at 717 even as consumers are faced with economic uncertainty

Inflation fatigue puts more borrowers under financial pressure

Read moreTake the next step

Connect with FICO for answers to all your product and solution questions. Interested in becoming a business partner? Contact us to learn more. We look forward to hearing from you.