Deep Dive: How to Make "Black Box" Neural Networks Explainable

Neural networks are a powerful form of machine learning, but their black box nature often defies explainability. We have found a solution to this problem.

Neural networks can significantly boost the arsenal of analytic tools companies use to solve their biggest business challenges. But countless organizations hesitate to deploy machine learning algorithms with a “black box” appearance; while their mathematical equations are often straightforward, deriving a human-understandable interpretation is often difficult. The result is that even machine learning models with potential business value may remain inexplicable — a quality incompatible with regulated industries — and thus often are not deployed into production.

To overcome this challenge, FICO has developed a patent-pending machine learning technique called Interpretable Latent Features; it brings explainability to the forefront, and is derived from our three decades of experience in using neural networks to solve business problems.

An Introduction to Explainability

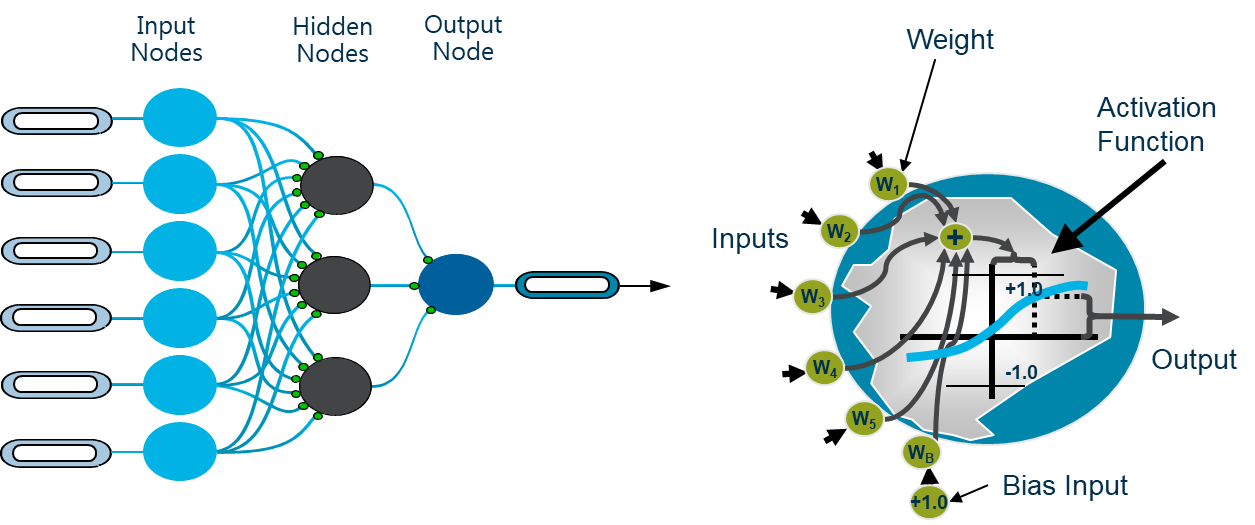

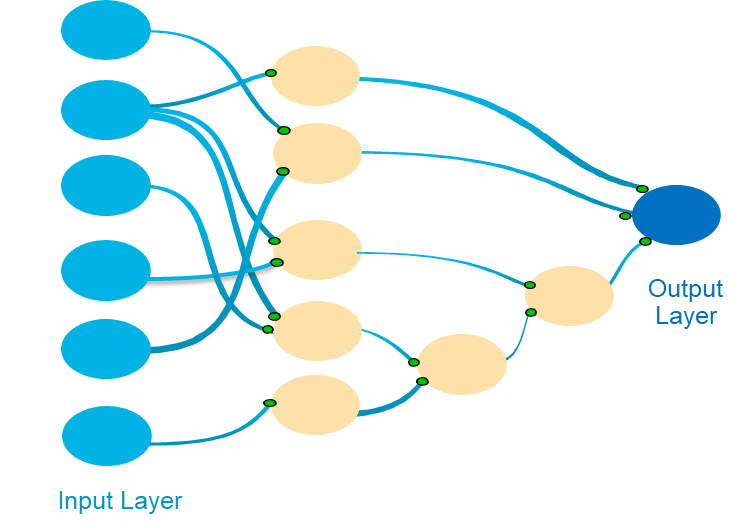

A neural network model has a complex structure, making even the simplest neural net with a single hidden layer hard to understand (Figure 1). FICO’s methodology expands the driving features of the specification of each hidden node. This leads to an explainable neural network; the transformations in the hidden nodes make the behavior of the neural network easily understandable by human analysts at companies and regulatory agencies, thus speeding the path to production.

Generating this model leads to the learning of a set of interpretable latent features: non-linear transformations of a single input variable, or interaction of two or more of them together. The interpretability threshold of the nodes is the upper threshold on the number of inputs allowed in a single hidden node.

Figure 1: Neural Network and Node Structure

Source: FICO Blog

Explaining Interpretability in a Cost Function

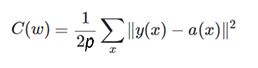

Neural networks are trained using back-propagation algorithms. In the example below, a cost function (a mean of squared errors) is minimized.

The back-propagation algorithm takes the derivative of this cost function to adjust the model weights. Adding a penalty term by way of sum of absolute weights has the impact of converging smaller weights to zero (and often the majority).

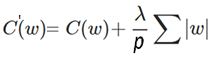

As a consequence, the hidden nodes get simplified. In Figure 2, hidden node LF1 is a non-linear transformation of input variable v2, and LF2 is an interaction of two input variables, v1 and v5. These nodes are considered resolved because the number of inputs is below or equal to the interpretability threshold of 2 in this example. On the other hand, the node LF3 is considered unresolved.

Figure 2: Neural Network with One Unresolved Node

Resolution Is an Iterative Process

To resolve an unresolved node, we tap into its activation. A new neural network model is then trained; the input variables of that hidden node become the predictors for the new neural network, and the hidden node activation is the target.

With the penalty term applied to the cost function, this process expresses the unresolved node in terms of another layer of hidden nodes, some of which are resolved. Applying this approach iteratively to all the unresolved nodes leads to a deep neural network with an unusual architecture, in which each node is resolved and therefore is interpretable.

Figure 3: Neural Network with Resolved Nodes

Source: FICO Blog

Interpretable Latent Features at Work

An explainable multi-layered neural network can be easily understood by an analyst, a business manager and a regulator. These machine learning models can be directly deployed due to their increased transparency. Alternatively, analytic teams can take the latent features learned and incorporate them into current model architectures (such as scorecards).

The advantage of leveraging this machine learning technique is twofold:

- It doesn’t change any of the established workflows for creating and deploying traditional models.

- It improves the models’ performance by suggesting new features to be used in the “traditional rails.”

This library, which is capable of taking an input dataset and learning the interpretable latent features, has been deployed as a library in the FICO® Platform - Analytics Workbench. It can:

- Export an enhanced dataset that contains the newly learned latent features, along with the original fields.

- Provide a list of definitions of each of the newly learned latent features. This dataset can be used to enhance traditional models like the scorecard models used in risk management.

To further exploit the power of latent feature interpretability, the learned explainable neural network model can be exported and deployed inside the FICO® Decision Management Platform, providing an end-to-end model development and execution capability. In this way, FICO is helping our customers to deconstruct any lingering “black box” connotations of machine learning and neural networks by unleashing the power of interpretable latent features.

Stay current with my latest analytic thinking and my race to achieve 100 authored patent applications. Follow me on Twitter @ScottZoldi.

Popular Posts

Average U.S. FICO Score at 717 as More Consumers Face Financial Headwinds

Outlier or Start of a New Credit Score Trend?

Read more

Average U.S. FICO® Score at 716, Indicating Improvement in Consumer Credit Behaviors Despite Pandemic

The FICO Score is a broad-based, independent standard measure of credit risk

Read more

Average U.S. FICO® Score stays at 717 even as consumers are faced with economic uncertainty

Inflation fatigue puts more borrowers under financial pressure

Read moreTake the next step

Connect with FICO for answers to all your product and solution questions. Interested in becoming a business partner? Contact us to learn more. We look forward to hearing from you.