A Moment of Science: Polyglot Everything

By Josh Hemann

Last month, I wrote about the commoditization of analytics. Actually, what I wrote was that although there were some trends in this area, I do not think it will go far because of the increasingly eclectic nature of tools and skills needed to work with Big Data. In this post, I want to go a little deeper into what I was referring to by “eclectic”…

Polyglotism

If you can speak several languages fluently, you are a polyglot. I am not a polyglot, but much of the world’s population is. For example, people in Sudan might speak Arabic in a public setting, English when at a government office, and a tribal language when with family.

Polyglotism exists in the domain of software development as well. I would argue that, as with human language, many of the world’s analytic programmers will need to be software language polyglots, if they are not already.

This notion of polyglot programming is not new. Neal Ford seems to have coined the phrase in 2006, and the idea has been popularized in recent years with respect to polyglot persistence. The idea is that with increasing instrumentation and digitization of life, multiple types of databases are needed to store (persist) information and use it effectively.

Of course, multiple databases have been used in business settings for years. In my experience working with retailers, it is almost certain that there is a mix of Oracle, Microsoft SQL Server, and IBM DB2 in use across different parts of operations. There is some polyglotism here in that SQL Server has query functionality that is distinct from Oracle’s.

But this type of polyglotism-by-accident is very different from purposefully and carefully choosing different databases for different parts of operational needs. These days, the area where a lot of purpose is needed is with respect to how NoSQL databases might be included in the mix. Key-value stores, document databases, graph databases, and scientific databases, for example, all make compromises with traditional database requirements (i.e., ACID) in exchange for flexibility and scalability.

Running NoSQL databases alongside traditional relational database management systems (RDBMSs) is increasingly common, especially in web-focused companies where there are large volumes of structured and unstructured data. And here is a key reality to understand: it is not as if these companies can afford to have a team of SQL developers at one end of the hall and a team of NoSQL developers at the other end. The same developers must often be fluent in RDBMSs and SQL, as well as in the completely different data models, query engines, and query semantics found in the various NoSQL flavors.

This is a concrete example of what polyglot persistence looks like: writing queries on relational data in a declarative language (i.e., SQL) using well-established tools, as well as writing queries on hierarchical and unstructured data in imperative languages (e.g., JavaScript) using more specialized tools (e.g., Hadoop). All in the same day.
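To make that switch a bit more tangible, here is a minimal sketch in Python that answers the same question, total sales per region, in two ways. The first hands a SQL query to an in-memory SQLite database and states only what result is wanted. The second walks nested, document-style records step by step, roughly the way you would in a hand-written job against a document store. The data, the field names, and the functions `totals_sql` and `totals_imperative` are all hypothetical, invented for illustration; this is not how any particular NoSQL product or Hadoop job is written.

```python
import sqlite3

# Hypothetical flat, relational-style records: (region, amount).
ROWS = [("north", 120.0), ("south", 80.0), ("north", 30.0)]

# The same hypothetical data shaped as nested documents.
DOCS = [
    {"region": "north", "orders": [{"amount": 120.0}, {"amount": 30.0}]},
    {"region": "south", "orders": [{"amount": 80.0}]},
]


def totals_sql(rows):
    """State the desired result in SQL and let the engine decide how to compute it."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
        conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT region, SUM(amount) FROM sales GROUP BY region"
        )
        return dict(cursor.fetchall())
    finally:
        conn.close()


def totals_imperative(docs):
    """Spell out each step: visit every document, then every nested order."""
    totals = {}
    for doc in docs:
        region = doc["region"]
        for order in doc.get("orders", []):
            totals[region] = totals.get(region, 0.0) + order["amount"]
    return totals


if __name__ == "__main__":
    print(totals_sql(ROWS))
    print(totals_imperative(DOCS))
```

Both functions return the same totals, but they ask you to think in different ways: one about sets and groupings, the other about loops and nesting. That mental shift is the cost I am describing.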

Why polyglotism matters

Travis Oliphant (a demigod in the Python scientific computing community) once told me something that has really stuck with me: shared abstractions are powerful. To add some color here, we rely on abstractions every day in our analytical work. For example, we use higher-level programming languages that allow us to focus on what we want to do, rather than on low-level machine instructions for how to change memory state. The SQL language is an abstraction for how to execute relational algebra on stored data. After more than three decades of use, no one can dispute that this abstraction is widely shared and powerful. (For an interesting read on the database language that lost out, see here.)

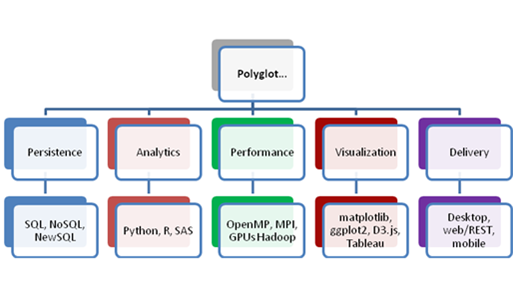

A couple of weeks ago, I was honored to speak at the PyData NYC 2012 conference, and one topic I touched on in my talk was this idea of polyglot everything:

No matter what the setting, I find myself having to mentally switch between abstractions throughout the day. Sometimes this is personally rewarding, since I feel like I am learning and seeing new ways of tackling old problems.

Other times, though, it is exhausting. Each time I switch between abstractions (e.g., MongoDB and Vertica; matplotlib and ggplot2), there is a bit of a cost (my wife would say a large cost, since I am incapable of multitasking).

Such is life in the current age of Big Data, and I don’t see this getting better any time soon. In coming posts, I’ll dive into what polyglotism looks like in some of these other verticals, how it affects business analytics, and where the new shared abstractions seem to be converging.
