Enumeration

In the previous scheduling examples we have used the default enumeration for scheduling problems, invoked by the optimization function cp_schedule. The built-in search strategies used by the solver in this case are particularly suited if we wish to minimize the completion time of a schedule. With other objectives the built-in strategies may not be a good choice, especially if the model contains decision variables that are not part of scheduling objects (the built-in strategies always enumerate first the scheduling objects) or if we wish to maximize an objective. Xpress Kalis makes it therefore possible to use the standard optimization functions cp_minimize and cp_maximize in models that contain scheduling objects, the default search strategies employed by these optimization functions being different from the scheduling-specific ones. In addition, the user may also define his own problem-specific enumeration as shown in the following examples.

User-defined enumeration strategies for scheduling problems may take two different forms: variable-based and task-based. The former case is the subject of Chapter Enumeration, and we only give a small example here (Section Variable-based enumeration). The latter will be explained with some more detail by the means of a job-shop scheduling example.

Variable-based enumeration

When studying the problem solving statistics for the bin packing problem in Section Cumulative scheduling: discrete resources we find that the enumeration using the default scheduling search strategies requires several hundred nodes to prove optimality. With a problem-specific search strategy we may be able to do better! Indeed, we shall see that a greedy-type strategy, assigning the largest files first, to the first disk with sufficient space is clearly more efficient.

Using cp_minimize

The only decisions to make in this problem are the assignments of files to disks, that is, choosing a value for the start time variables of the tasks. The following lines of Mosel code order the tasks in decreasing order of file sizes and define an enumeration strategy for their start times assigning each the smallest possible disk number first. Notice that the sorting subroutine qsort is defined by the module mmsystem that needs to be loaded with a uses statement at the beginning of the model.

declarations

Strategy: array(range) of cpbranching

FSORT: array(FILES) of integer

end-declarations

qsort(SYS_DOWN, SIZE, FSORT) ! Sort files in decreasing order of size

forall(f in FILES)

Strategy(f):=assign_var(KALIS_SMALLEST_MIN, KALIS_MIN_TO_MAX,

{getstart(file(FSORT(f)))})

cp_set_branching(Strategy)

if not cp_minimize(diskuse) then writeln("Problem infeasible"); end-if The choice of the variable selection criterion (first argument of assign_var) is not really important here since every strategy Strategyf only applies to a single variable and hence no selection takes place. Equivalently, we may have written

declarations

Strategy: cpbranching

FSORT: array(FILES) of integer

LS: cpvarlist

end-declarations

qsort(SYS_DOWN, SIZE, FSORT) ! Sort files in decreasing order of size

forall(f in FILES)

LS+=getstart(file(FSORT(f)))

Strategy:=assign_var(KALIS_INPUT_ORDER, KALIS_MIN_TO_MAX, LS)

cp_set_branching(Strategy)

if not cp_minimize(diskuse) then writeln("Problem infeasible"); end-if With this search strategy the first solution found uses 3 disks, that is, we immediately find an optimal solution. The whole search terminates after 17 nodes and takes only a fraction of the time needed by the default scheduling or minimization strategies.

Using cp_schedule

The scheduling search consists of a pretreatment (shaving) phase and two main phases (KALIS_INITIAL_SOLUTION and KALIS_OPTIMAL_SOLUTION) for which the user may specify enumeration strategies by calling cp_set_schedule_search with the corresponding phase selection. The `initial solution' phase aims at providing quickly a good solution whereas the `optimal solution' phase proves optimality. Any search limits such as maximum number of nodes apply separately to each phase, an overall time limit (parameter KALIS_MAX_COMPUTATION_TIME) only applies to the last phase.

The definition of variable-based branching schemes for the scheduling search is done in exactly the same way as what we have seen previously for standard search with cp_minimize or cp_maximize, replacing cp_set_strategy by cp_set_schedule_strategy:

cp_set_schedule_branching(KALIS_INITIAL_SOLUTION, Strategy)

if cp_schedule(diskuse)=0 then writeln("Problem infeasible"); end-if With this search strategy, the optimal solution is found in the 'initial solution' phase after just 8 nodes and the enumeration stops there since the pretreatment phase has proven a lower bound of 3 which is just the value of the optimal solution.

NB: to obtain an output log from the different phases of the scheduling search set the control parameter KALIS_VERBOSE_LEVEL to 2, that is, add the following line to your model before the start of the solution algorithm.

setparam("KALIS_VERBOSE_LEVEL", 2) Task-based enumeration

A task-based enumeration strategy consists in the definition of a selection strategy choosing the task to be enumerated, a value selection heuristic for the task durations, and a value selection heuristic for the task start times.

Consider the typical definition of a job-shop scheduling problem: we are given a set of jobs that each need to be processed in a fixed order by a given set of machines. A machine executes one job at a time. The durations of the production tasks (= processing of a job on a machine) and the sequence of machines per job for a 6×6 instance are shown in Table 66 job-shop instance from . The objective is to minimize the makespan (latest completion time) of the schedule.

| Job | Machines | Durations | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 3 | 1 | 2 | 4 | 6 | 5 | 1 | 3 | 6 | 7 | 3 | 6 |

| 2 | 2 | 3 | 5 | 6 | 1 | 4 | 8 | 5 | 10 | 10 | 10 | 4 |

| 3 | 3 | 4 | 6 | 1 | 2 | 5 | 5 | 4 | 8 | 9 | 1 | 7 |

| 4 | 2 | 1 | 3 | 4 | 5 | 6 | 5 | 5 | 5 | 3 | 8 | 9 |

| 5 | 3 | 2 | 5 | 6 | 1 | 4 | 9 | 3 | 5 | 4 | 3 | 1 |

| 6 | 2 | 4 | 6 | 1 | 5 | 3 | 3 | 3 | 9 | 10 | 4 | 1 |

Model formulation

Let JOBS denote the set of jobs and MACH (MACH = {1,...,NM}) the set of machines. Every job j is produced as a sequence of tasks taskjm where taskjm needs to be finished before taskj,m+1 can start. A task taskjm is processed by the machine RESjm and has a fixed duration DURjm.

The following model formulates the job-shop scheduling problem.

tasks taskjm (j ∈ JOBS, m ∈ MACH)

minimize finish

finish = maximumj ∈ JOBS(taskj,NM.end)

∀ m ∈ MACH: resm.capacity = 1

∀ j ∈ JOBS, m ∈ MACH: taskjm.start, taskjm.end ∈ {0,...,MAXTIME}

∀ j ∈ JOBS, m ∈ {1,...,NM-1}: taskjm.successors = {taskj,m+1}

∀ j ∈ JOBS, m ∈ MACH: taskjm.duration = DURjm

∀ j ∈ JOBS, m ∈ MACH: taskjm.requirementRESjm = 1

Implementation

The following Mosel model implements the job-shop scheduling problem and defines a task-based branching strategy for solving it. We select the task that has the smallest remaining domain for its start variable and enumerate the possible values for this variable starting with the smallest. A task-based branching strategy is defined in Xpress Kalis with the function task_serialize, that takes as arguments the user task selection, value selection strategies for the duration and start variables, and the set of tasks it applies to. Such task-based branching strategies can be combined freely with any variable-based branching strategies.

model "Job shop (CP)"

uses "kalis", "mmsystem"

parameters

DATAFILE = "jobshop.dat"

NJ = 6 ! Number of jobs

NM = 6 ! Number of resources

end-parameters

forward function select_task(tlist: cptasklist): integer

declarations

JOBS = 1..NJ ! Set of jobs

MACH = 1..NM ! Set of resources

RES: array(JOBS,MACH) of integer ! Resource use of tasks

DUR: array(JOBS,MACH) of integer ! Durations of tasks

res: array(MACH) of cpresource ! Resources

task: array(JOBS,MACH) of cptask ! Tasks

end-declarations

initializations from "Data/"+DATAFILE

RES DUR

end-initializations

HORIZON:= sum(j in JOBS, m in MACH) DUR(j,m)

forall(j in JOBS) getend(task(j,NM)) <= HORIZON

! Setting up the resources (capacity 1)

forall(m in MACH)

set_resource_attributes(res(m), KALIS_UNARY_RESOURCE, 1)

! Setting up the tasks (durations, resource used)

forall(j in JOBS, m in MACH)

set_task_attributes(task(j,m), DUR(j,m), res(RES(j,m)))

! Precedence constraints between the tasks of every job

forall (j in JOBS, m in 1..NM-1)

! getstart(task(j,m)) + DUR(j,m) <= getstart(task(j,m+1))

setsuccessors(task(j,m), {task(j,m+1)})

! Branching strategy

Strategy:=task_serialize("select_task", KALIS_MIN_TO_MAX,

KALIS_MIN_TO_MAX,

union(j in JOBS, m in MACH | exists(task(j,m))) {task(j,m)})

cp_set_branching(Strategy)

! Solve the problem

starttime:= gettime

if not cp_minimize(getmakespan) then

writeln("Problem is infeasible")

exit(1)

end-if

! Solution printing

cp_show_stats

write(gettime-starttime, "sec ")

writeln("Total completion time: ", getsol(getmakespan))

forall(j in JOBS) do

write("Job ", strfmt(j,-2))

forall(m in MACH | exists(task(j,m)))

write(strfmt(RES(j,m),3), ":", strfmt(getsol(getstart(task(j,m))),3),

"-", strfmt(getsol(getend(task(j,m))),2))

writeln

end-do

!*******************************************************************

! Task selection for branching

function select_task(tlist: cptasklist): integer

declarations

Tset: set of integer

end-declarations

! Get the number of elements of "tlist"

listsize:= getsize(tlist)

! Set of uninstantiated tasks

forall(i in 1..listsize)

if not is_fixed(getstart(gettask(tlist,i))) then

Tset+= {i}

end-if

returned:= 0

! Get a task with smallest start time domain

smin:= min(j in Tset) getsize(getstart(gettask(tlist,j)))

forall(j in Tset)

if getsize(getstart(gettask(tlist,j))) = smin then

returned:=j; break

end-if

end-function

end-model

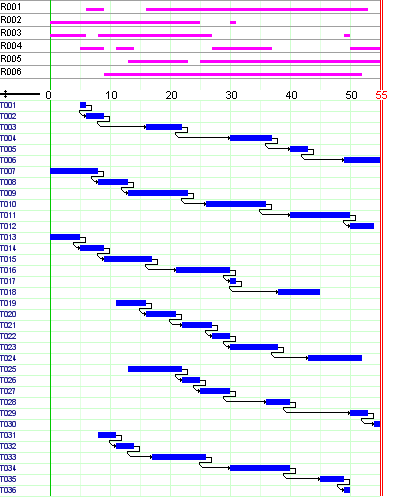

Results

An optimal solution to this problem has a makespan of 55. In comparison with the default scheduling strategy, our branching strategy reduces the number of nodes that are enumerated from over 300 to just 105 nodes with a comparable model execution time (however, for larger instances the default scheduling strategy is likely to outperform our branching strategy). The default minimization strategy does not find any solution for this problem within several minutes running time.

Figure 5.8: Solution graph

Alternative search strategies

Similarly to what we have seen above, we may define a user task selection strategy for the scheduling search. The only modifications required in our model are to replace cp_set_branching by cp_set_schedule_strategy and cp_minimize by cp_schedule. The definition of the user task choice function select_task remains unchanged.

! Branching strategy

Strategy:=task_serialize("select_task", KALIS_MIN_TO_MAX,

KALIS_MIN_TO_MAX,

union(j in JOBS, m in MACH | exists(task(j,m))) {task(j,m)})

cp_set_schedule_strategy(KALIS_INITIAL_SOLUTION, Strategy)

! Solve the problem

if cp_schedule(getmakespan)=0 then

writeln("Problem is infeasible")

exit(1)

end-if

This strategy takes even fewer nodes for completing the enumeration than the standard search with user task selection.

Instead of the user-defined task selection function select_task it is equally possible to use one of the predefined task selection criteria:

- KALIS_SMALLEST_EST / KALIS_LARGEST_EST

- choose task with the smallest/largest lower bound on its start time ( 'earliest start time'),

- KALIS_SMALLEST_LST / KALIS_LARGEST_LST

- choose task with the smallest/largest upper bound on its start time ( 'latest start time'),

- KALIS_SMALLEST_ECT / KALIS_LARGEST_ECT

- choose task with the smallest/largest lower bound on its completion time ( 'earliest completion time'),

- KALIS_SMALLEST_LCT / KALIS_LARGEST_LCT

- choose task with the smallest/largest upper bound on its completion time ( 'latest completion time').

Strategy:=task_serialize(KALIS_SMALLEST_LCT, KALIS_MIN_TO_MAX,

KALIS_MIN_TO_MAX,

union(j in JOBS, m in MACH | exists(task(j,m))) {task(j,m)}) Even more specialized task selection strategies can be defined with the help of group_serialize. In this case you may decide freely which are the variables to enumerate for a task and in which order they are to be considered. The scope of group_serialize is not limited to tasks, you may use this subroutine to define search strategies involving other types of (user) objects.

Choice of the propagation algorithm

The performance of search algorithms for scheduling problems relies not alone on the definition of the enumeration strategy; the choice of the propagation algorithm for resource constraints may also have a noticeable impact. The propagation type is set by an optional last argument of the procedure set_resources_attributes, such as

set_resource_attributes(res, KALIS_DISCRETE_RESOURCE, CAP, ALG)

where ALG is the propagation algorithm choice.

For unary resources we have a choice between the algorithms KALIS_TASK_INTERVALS and KALIS_DISJUNCTIONS. The former achieves stronger pruning at the cost of a larger computational overhead making the choice of KALIS_DISJUNCTIONS more competitive for small to medium sized problems. Taking the example of the jobshop problem from the previous section, when using KALIS_DISJUNCTIONS the default scheduling search is about 4 times faster than with KALIS_TASK_INTERVALS although the number of nodes explored is slightly larger than with the latter.

The propagation algorithm options for cumulative resources are KALIS_TASK_INTERVALS and KALIS_TIMETABLING. The filtering algorithm KALIS_TASK_INTERVALS is stronger and relatively slow making it the preferred choice for hard, medium-sized problems whereas KALIS_TIMETABLING should be given preference for very large problems where the computational overhead of the former may be prohibitive. In terms of an example, the binpacking problem we have worked with in Sections Cumulative scheduling: discrete resources and Variable-based enumeration solves about three times faster with KALIS_TASK_INTERVALS than with KALIS_TIMETABLING (using the default scheduling search).