Basic tasks

Topics covered in this section:

- Executing a submodel

- Stopping the submodel execution

- Output from the submodel

- Compilation to memory

- Runtime parameters

- Running several submodels

- Communication of data between models

- Working with remote Mosel instances

- XPRD: Remote model execution without local installation

This section introduces the basic tasks that typically need to be performed when working with several models in Mosel using the functionality of the module mmjobs.

Executing a submodel

Assume we are given the following simple model testsub.mos (the submodel) that we wish to run from a second model (its parent model):

model "Test submodel"
 forall(i in 10..20) write(i^2, " ")
 writeln
end-model

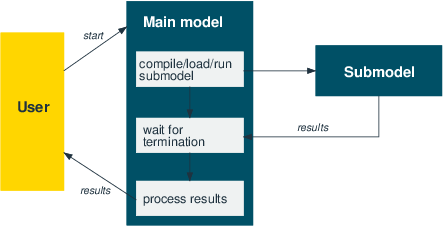

The reader is certainly familiar with the standard compile-load-run sequence that is always required to execute a Mosel model, regardless of where it is executed from (the Mosel command line, a host application, or another Mosel model). When a Mosel model executes a second model, we need to extend this standard sequence to compile-load-run-wait. The reason is that the submodel is started in a separate thread, so the parent model must make sure that the submodel has terminated before the parent model ends (or before it continues its execution with results from the submodel, see Section Communication of data between models below).

Figure 1: Executing a submodel

The following model runtestsub.mos uses the compile-load-run-wait sequence in its basic form to execute the model testsub.mos printed above. The corresponding Mosel subroutines are defined by the module mmjobs, which needs to be included with a uses statement. The submodel remains as is (that is, there is no need to include mmjobs if the submodel itself does not use any functionality of this module). The last statement of the controlling (parent) model, dropnextevent, may require some further explanation: the termination of the submodel is indicated by an event (of class EVENT_END) that is sent to the parent model. The wait statement pauses the execution of the parent model until it receives an event. Since in the present case the only event sent by the submodel is this termination message, we simply remove the message from the event queue of the controlling model without checking its nature.

model "Run model testsub"

uses "mmjobs"

declarations

modSub: Model

end-declarations

! Compile the model file

if compile("testsub.mos")<>0: exit(1)

load(modSub, "testsub.bim") ! Load the bim file

run(modSub) ! Start model execution

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-model

The two models are run by executing the controlling model in any of the standard ways of executing Mosel models (from the Mosel command line, within Xpress Workbench, or from a host application). With the Mosel command line you may use, for instance, the following command:

mosel exec runtestsub.mos

As a result you should see the following output printed by the submodel (see Section Output from the submodel for a discussion of output handling):

100 121 144 169 196 225 256 289 324 361 400

Retrieving events

If we wish to obtain more precise information about the termination status of the submodel we could replace the statement dropnextevent by the following lines that retrieve the event sent by the submodel and print out its class (the termination event has the predefined class EVENT_END) and the value attached to it (here the default value 0). In addition, we display the exit code of the model (value sent by an exit statement terminating the model execution or the default value 0).

declarations

ev: Event

end-declarations

ev:=getnextevent

writeln_("Event class: ", getclass(ev))

writeln_("Event value: ", getvalue(ev))

writeln_("Exit code : ", getexitcode(modSub))

Events also provide information about the sending model: its internal ID and, optionally, its user ID and group ID (provided that these have previously been defined for this model with setuid or setgid, respectively):

writeln_("Event sent by : ", getfromid(ev))

writeln_("Model user ID : ", getfromuid(ev))

writeln_("Model group ID: ", getfromgid(ev))

Stopping the submodel execution

If a submodel execution takes a long time it may be desirable to interrupt the submodel without stopping the controlling model itself. The following modified version of our parent model (file runsubwait.mos) shows how this can be achieved by adding a duration (in seconds) to the wait statement. If the submodel has not yet sent the termination event message after executing for one second it is stopped by the call to stop with the model reference.

model "Run model testsub"

uses "mmjobs"

declarations

modSub: Model

end-declarations

! Compile the model file

if compile("testsub.mos")<>0: exit(1)

load(modSub, "testsub.bim") ! Load the bim file

run(modSub) ! Start model execution

wait(1) ! Wait 1 second for an event

if isqueueempty then ! No event has been sent: model still runs

writeln_("Stopping the submodel")

stop(modSub) ! Stop the model

wait ! Wait for model termination

end-if

dropnextevent ! Ignore termination event message

end-model

A more precise time measurement can be obtained by having the submodel send a "model ready" user event and retrieving this event before the parent model begins to wait for a given duration. Under heavy operating system load the actual start of the submodel may be delayed, and the event sent by the submodel tells the parent model the exact point in time when its processing starts. Class codes for user events can take any integer value greater than 1 (the values 0 and 1 are reserved for the nullevent and the predefined class EVENT_END, respectively).

model "Run model testsub"

uses "mmjobs"

declarations

modSub: Model

ev: Event

SUBMODREADY = 2 ! User event class code

end-declarations

! Compile the model file

if compile("testsubev.mos")<>0: exit(1)

load(modSub, "testsubev.bim") ! Load the bim file

run(modSub) ! Start model execution

wait ! Wait for an event

if getclass(getnextevent) <> SUBMODREADY then

writeln_("Problem with submodel run")

exit(1)

end-if

wait(1) ! Let the submodel run for 1 second

if isqueueempty then ! No event has been sent: model still runs

stop(modSub) ! Stop the model

wait ! Wait for model termination

end-if

ev:=getnextevent ! An event is available: model finished

writeln_("Event class: ", getclass(ev))

writeln_("Event value: ", getvalue(ev))

writeln_("Exit code : ", getexitcode(modSub))

end-model

The modified submodel testsubev.mos now looks as follows. The user event class must be the same as in the parent model. In this example we are not interested in the value sent with the event and therefore simply leave it at 0.

model "Test submodel (Event)"
 uses "mmjobs"

 declarations
  SUBMODREADY = 2 ! User event class code
 end-declarations

 send(SUBMODREADY, 0) ! Send "submodel ready" event
 forall(i in 10..20) write(i^2, " ")
 writeln
end-model
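Rather than testing for one specific event class, a parent model can also process every event it receives until the termination event arrives. The following is a sketch that assumes the submodel testsubev.mos shown above; the model name and the output format are illustrative. It relies on wait returning immediately when the event queue is not empty, so events that arrive close together are all handled. With testsubev.mos, the loop should report the user event of class 2 before the termination event.

```mosel
model "Run model testsubev with event loop"
 uses "mmjobs"

 declarations
  modSub: Model
  ev: Event
 end-declarations

 if compile("testsubev.mos")<>0 then
  exit(1)
 end-if
 load(modSub, "testsubev.bim")     ! Load the bim file
 run(modSub)                       ! Start model execution

 ! Handle events in arrival order until the submodel reports its end
 repeat
  wait                             ! Block until an event is in the queue
  ev:= getnextevent                ! Remove the oldest event from the queue
  writeln("Event class: ", getclass(ev), ", value: ", getvalue(ev))
 until getclass(ev) = EVENT_END

 writeln("Exit code: ", getexitcode(modSub))
end-model
```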

Output from the submodel

By default, submodels use the same location for their output as their parent model. This implies that when several (sub)models run in parallel, their output (for example, on screen) is likely to be interleaved. The best way to handle this situation is to redirect the output of each model to a separate file.

Output may be redirected directly within the submodel with statements like the following added to the model before any output is printed:

fopen("testout.txt", F_OUTPUT+F_APPEND) ! Output to file (in append mode)

fopen("tee:testout.txt&", F_OUTPUT) ! Output to file and on screen

fopen("null:", F_OUTPUT) ! Disable all output

The first line redirects the output to the file testout.txt, the second statement keeps the output on screen while writing to the file at the same time, and the third line makes the model entirely silent. The output file is closed by adding the statement fclose(F_OUTPUT) after the printing statements in the model.
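Putting these statements together, a version of testsub.mos that writes its output to a file might look as follows. This is a sketch: the model name is illustrative, and because it uses the append mode shown above, repeated runs add to the existing contents of testout.txt.

```mosel
model "Test submodel (file output)"
 ! Redirect all subsequent output to testout.txt (append mode)
 fopen("testout.txt", F_OUTPUT+F_APPEND)

 forall(i in 10..20) write(i^2, " ")
 writeln

 ! Close the file; later output goes back to the previous output stream
 fclose(F_OUTPUT)
end-model
```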

The same can be achieved from the parent model by adding output redirection before the run statement for the corresponding submodel ('modSub'), such as:

setdefstream(modSub, F_OUTPUT, "testout.txt") ! Output to file

setdefstream(modSub, F_OUTPUT, "tee:testout.txt&") ! Output to file and on screen

setdefstream(modSub, F_OUTPUT, "null:") ! Disable all output

The output redirection for a submodel may be terminated by resetting its output stream to the default output:

setdefstream(modSub, F_OUTPUT, "")
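As a sketch of the parent-model approach, the following model (its name is illustrative) runs testsub.mos twice: the first run writes to testout.txt, then the redirection is reset so that the second run prints on screen. It assumes, as in Section Sequential submodels, that a loaded model can be run several times in sequence.

```mosel
model "Run model testsub with redirected output"
 uses "mmjobs"

 declarations
  modSub: Model
 end-declarations

 if compile("testsub.mos")<>0 then
  exit(1)
 end-if
 load(modSub, "testsub.bim")                   ! Load the bim file

 setdefstream(modSub, F_OUTPUT, "testout.txt") ! First run: output to file
 run(modSub)
 wait                                          ! Wait for model termination
 dropnextevent                                 ! Ignore termination event

 setdefstream(modSub, F_OUTPUT, "")            ! Reset to the default output
 run(modSub)                                   ! Second run: output on screen
 wait
 dropnextevent
end-model
```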

Compilation to memory

The default compilation of a Mosel file filename.mos generates a binary model file filename.bim. To avoid generating physical BIM files for submodels, we may compile the submodel to memory, as shown in the following example runsubmem.mos. Working in memory is usually more efficient than accessing physical files. This feature is also helpful if you do not have write access in the directory where the parent model is executed.

model "Run model testsub"

uses "mmjobs", "mmsystem"

declarations

modSub: Model

end-declarations

! Compile the model file

if compile("", "testsub.mos", "shmem:testsubbim")<>0 then

exit(1)

end-if

load(modSub, "shmem:testsubbim") ! Load the bim file from memory

fdelete("shmem:testsubbim") ! ... and release the memory block

run(modSub) ! Start model execution

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-model

The full version of compile takes three arguments: the compilation flags (e.g., "g" for debugging), the model file name, and the output file name (here a label prefixed by the name of the shared memory driver). Once the model has been loaded we may free the memory used by the compiled model with a call to fdelete (this subroutine is provided by the module mmsystem, which needs to be loaded in addition to mmjobs).
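Several Model objects may be loaded from the same memory block; in that case the block should only be deleted after the last load. A minimal sketch under that assumption, running two instances of testsub.mos one after the other (the model name, the range R, and the label testsubbim are illustrative):

```mosel
model "Run two instances of testsub from memory"
 uses "mmjobs", "mmsystem"

 declarations
  R = 1..2
  modSub: array(R) of Model
 end-declarations

 ! Compile once into a memory block
 if compile("", "testsub.mos", "shmem:testsubbim")<>0 then
  exit(1)
 end-if

 forall(r in R) load(modSub(r), "shmem:testsubbim")  ! Every load reads the same block
 fdelete("shmem:testsubbim")        ! Free the block only after the last load

 forall(r in R) do
  run(modSub(r))                    ! Start model execution
  wait                              ! Wait for model termination
  dropnextevent                     ! Ignore termination event message
 end-do
end-model
```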

Runtime parameters

A convenient means of modifying data in a Mosel model when running the model (that is, without having to modify the model itself and recompile it) is to use runtime parameters. Such parameters are declared at the beginning of the model in a parameters block where every parameter is given a default value that will be applied if no other value is specified for this parameter at the model execution.

Consider the following model rtparams.mos that may receive parameters of four different types—integer, real, string, and Boolean—and prints out their values.

model "Runtime parameters"
 parameters
  PARAM1 = 0
  PARAM2 = 0.5
  PARAM3 = ''
  PARAM4 = false
 end-parameters

 writeln_(PARAM1, " ", PARAM2, " ", PARAM3, " ", PARAM4)
end-model

The model runrtparam.mos executing this (sub)model may look as follows—all runtime parameters are given new values:

model "Run model rtparams"

uses "mmjobs"

declarations

modPar: Model

end-declarations

! Compile the model file

if compile("rtparams.mos")<>0: exit(1)

load(modPar, "rtparams.bim") ! Load the bim file

! Start model execution

run(modPar, "PARAM1=" + 2 + ",PARAM2=" + 3.4 +

",PARAM3='a string'" + ",PARAM4=" + true)

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-model

An alternative formulation uses the subroutine setmodpar to define the parameter values for the submodel (notice that we need to load the module mmsystem for the text handling functionality). After starting the model run we delete the parameters definition; it would also be possible to remove individual parameter value settings by applying resetmodpar to the object params.

model "Run model rtparams 2"

uses "mmjobs", "mmsystem"

declarations

modPar: Model

params: text

end-declarations

! Compile the model file

if compile("rtparams.mos")<>0: exit(1)

load(modPar, "rtparams.bim") ! Load the bim file

setmodpar(params, "PARAM1", 2) ! Set values for model parameters

setmodpar(params, "PARAM2", 3.4)

setmodpar(params, "PARAM3", 'a string')

setmodpar(params, "PARAM4", true)

run(modPar, params) ! Start model execution

params:= "" ! Delete the parameters definition

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-model

Running several submodels

Once we have seen how to run a parameterized model from another model it is only a small step to the execution of several different submodel instances from a Mosel model. The following sections deal with the three cases of sequential, fully parallel, and restricted (queued) parallel execution of submodels. For simplicity's sake, the submodels in our examples are all parameterized versions of a single model. It is of course equally possible to compile, load, and run different submodel files from a single parent model.

Sequential submodels

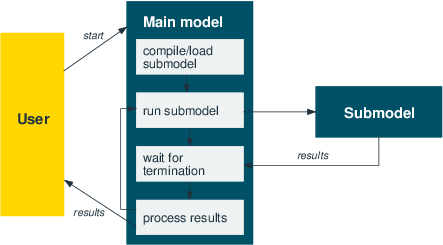

Figure 2: Sequential submodels

Running several instances of a submodel in sequence only requires small modifications to the parent model that we have used for a single model instance as can be seen from the following example (file runrtparamseq.mos)—to keep things simple, we now only reset a single parameter at every execution:

model "Run model rtparams in sequence"

uses "mmjobs"

declarations

A = 1..10

modPar: Model

end-declarations

! Compile the model file

if compile("rtparams.mos")<>0: exit(1)

load(modPar, "rtparams.bim") ! Load the bim file

forall(i in A) do

run(modPar, "PARAM1=" + i) ! Start model execution

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-do

end-model

The submodel is compiled and loaded only once, and after starting the execution of a submodel instance we wait for its termination before the next instance is started.
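Because each run has finished before the next one starts, the parent model can collect per-run information between runs. The sketch below records the exit code of every instance with getexitcode; the model name and the array ExitCode are hypothetical additions to runrtparamseq.mos.

```mosel
model "Run model rtparams in sequence with exit codes"
 uses "mmjobs"

 declarations
  A = 1..10
  modPar: Model
  ExitCode: array(A) of integer      ! Exit code of each run
 end-declarations

 if compile("rtparams.mos")<>0 then
  exit(1)
 end-if
 load(modPar, "rtparams.bim")         ! Compile and load only once

 forall(i in A) do
  run(modPar, "PARAM1=" + i)         ! Start the next run
  wait                               ! Wait for its termination
  dropnextevent                      ! Ignore termination event message
  ExitCode(i):= getexitcode(modPar)  ! Record the result before the next run
 end-do

 writeln("Exit codes: ", ExitCode)
end-model
```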

Parallel submodels

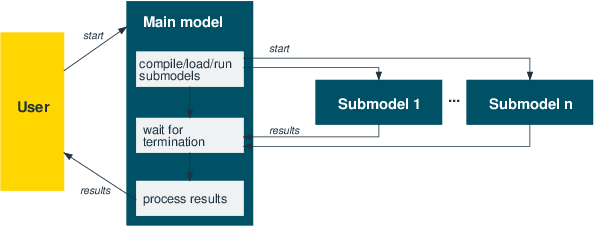

Figure 3: Parallel submodels

Executing submodel instances in parallel requires slightly more modifications. We still compile the submodel only once, but it now needs to be loaded as many times as we want to run parallel instances. The wait statements are moved to a separate loop since we first want to start all submodels and then wait for their termination.

model "Run model rtparams in parallel"

uses "mmjobs"

declarations

A = 1..10

modPar: array(A) of Model

end-declarations

! Compile the model file

if compile("rtparams.mos")<>0: exit(1)

forall(i in A) do

load(modPar(i), "rtparams.bim") ! Load the bim file

run(modPar(i), "PARAM1=" + i) ! Start model execution

end-do

forall(i in A) do

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-do

end-model

The order in which the submodel output appears on screen is nondeterministic because the models run in parallel. However, since each submodel executes very quickly, this may not become obvious: try adding the line wait(1) to the submodel rtparams.mos immediately before the writeln_ statement (you will also need to add the statement uses "mmjobs" at the beginning of the model) and compare the output of several runs of the main model. You are now likely to see a different output order with every run.
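The modified submodel described above might look as follows; apart from the uses statement and the wait(1) call, it is unchanged from rtparams.mos. Since this submodel receives no events, wait(1) simply pauses it for about one second.

```mosel
model "Runtime parameters"
 uses "mmjobs"                 ! Needed for wait

 parameters
  PARAM1 = 0
  PARAM2 = 0.5
  PARAM3 = ''
  PARAM4 = false
 end-parameters

 wait(1)                       ! Pause about 1 second before printing
 writeln_(PARAM1, " ", PARAM2, " ", PARAM3, " ", PARAM4)
end-model
```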

Instead of repeatedly loading the submodel instances from the same BIM file we only really need to do so once and can then produce all further copies as clones of the first submodel—doing so will generally be slightly more efficient in terms of speed and memory usage.

model "Run model rtparams in parallel with cloned submodels"

uses "mmjobs"

declarations

A = 1..10

modPar: array(A) of Model

end-declarations

! Compile the model file

if compile("rtparams.mos")<>0: exit(1)

forall(i in A) do

if i=1 then

load(modPar(i), "rtparams.bim") ! First submodel: Load the bim file

else

load(modPar(i), modPar(1)) ! Clone the already loaded bim

end-if

run(modPar(i), "PARAM1=" + i) ! Start model execution

end-do

forall(i in A) do

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-do

end-model

Job queue for managing parallel submodels

The parallel execution of submodels in the previous section starts a large number of models at the same time. With computationally more expensive submodel runs this simple design might not be an appropriate choice: as a general rule, we recommend that the number of concurrent (sub)models should not exceed the number of processors available to avoid any negative impact on performance, and there might also be restrictions on the number of concurrent models imposed by your Xpress licence.

The following example therefore shows how to extend the parent model from the previous section so as to limit the number of parallel submodel executions. The model instances to be run are implemented as a job queue, a list of instance indices that is accessed in first-in-first-out order. There are now two sets of indices: the job indices (set A) for the instances we wish to process, and the model indices (set RM) corresponding to the concurrently executing Mosel models. We store the model index of each model as its user ID and use the mapping jobid, which records for each model index the job that this model is currently processing.

As before, we compile the submodel and load the required number of model instances into Mosel. We then take the first few entries from the job list and start the corresponding model runs. As soon as a model run terminates, a new instance is started through the procedure start_next_job (see below).

The model takes a parameter NUMPAR that lets you change the limit on the number of submodels at run time.

model "Run model rtparams with job queue"

uses "mmjobs"

parameters

NUMPAR=2 ! Number of parallel model executions

end-parameters ! (preferably <= no. of processors)

forward procedure start_next_job(submod:Model)

declarations

RM = 1..NUMPAR ! Model indices

A = 1..10 ! Job (instance) indices

modPar: array(RM) of Model ! Models

jobid: array(set of integer) of integer ! Job index for model IDs

JobList: list of integer ! List of jobs

JobsRun: set of integer ! Set of finished jobs

JobSize: integer ! Number of jobs to be executed

Msg: Event ! Messages sent by models

end-declarations

! Compile the model file

if compile("rtparams.mos")<>0: exit(1)

forall(m in RM) do

load(modPar(m), "rtparams.bim") ! Load the bim file

modPar(m).uid:= m ! Store the model ID as UID

end-do

JobList:= sum(i in A) [i] ! Define the list of jobs (instances)

JobSize:=JobList.size ! Store the number of jobs

JobsRun:={} ! Set of terminated jobs is empty

!**** Start initial lot of model runs ****

forall(m in RM)

if JobList<>[] then

start_next_job(modPar(m))

end-if

!**** Run all remaining jobs ****

while (JobsRun.size<JobSize) do

wait ! Wait for model termination

Msg:= getnextevent

if getclass(Msg)=EVENT_END then ! We are only interested in "end" events

m:=getfromuid(Msg) ! Retrieve the model UID

JobsRun+={jobid(m)} ! Keep track of job termination

writeln_("End of job ", jobid(m), " (model ", m, ")")

if JobList<>[] then ! Start a new run if queue not empty

start_next_job(modPar(m))

end-if

end-if

end-do

In the while loop above we identify the model that has sent the termination message and add the corresponding job identifier to the set JobsRun of completed instances. If there are any remaining jobs in the list, the procedure start_next_job is called; it takes the next job and starts it with the model that has just been released. This procedure completes the model file:

procedure start_next_job(submod: Model)
 i:=getfirst(JobList) ! Retrieve first job in the list
 cuthead(JobList,1) ! Remove first entry from job list
 jobid(submod.uid):= i
 writeln_("Start job ", i, " (model ", submod.uid, ")")
 run(submod, "PARAM1=" + i + ",PARAM2=" + 0.1*i +
     ",PARAM3='string " + i + "'" + ",PARAM4=" + isodd(i))
end-procedure

end-model

The job queue in our example is static, in the sense that the list of jobs to be processed is fixed right at the beginning. Using standard Mosel list handling functionality, it can easily be turned into a dynamic queue to which new jobs are added while others are already being processed.
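A minimal sketch of such a dynamic queue replaces the while loop of the job queue model above with the version below. The rule that the end of job 3 creates a follow-up job 11 is purely hypothetical and only stands in for whatever logic produces new work.

```mosel
 !**** Run all remaining jobs (dynamic variant) ****
 while (JobsRun.size<JobSize) do
  wait                             ! Wait for model termination
  Msg:= getnextevent
  if getclass(Msg)=EVENT_END then
   m:=getfromuid(Msg)              ! Retrieve the model UID
   JobsRun+={jobid(m)}             ! Keep track of job termination
   if jobid(m)=3 then              ! Hypothetical rule: job 3 adds job 11
    JobList+= [11]                 ! Append at the end (first-in-first-out)
    JobSize+= 1                    ! One more job must finish before the loop ends
   end-if
   if JobList<>[] then             ! Start a new run if queue not empty
    start_next_job(modPar(m))
   end-if
  end-if
 end-do
```

In this sketch a new job is always appended just before the released model looks for its next job, so it is picked up immediately. If jobs could arrive while some models sit idle with an empty queue, those idle models would also have to be restarted when the job is added, because the loop only starts jobs on a model that has just terminated.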

Communication of data between models

Runtime parameters are a means of communicating single data values to a submodel, but they are not suited, for instance, to passing data tables or sets to a submodel. Also, they cannot be used to retrieve any information from a submodel or to exchange data between models during their execution. All these tasks are addressed by the two I/O drivers defined by the module mmjobs: the shmem driver and the mempipe driver. As stated earlier, the shmem driver is meant for one-to-many communication (one model writing, many reading) and the mempipe driver serves for many-to-one communication. In the case of one model writing and one model reading we may use either, where shmem is conceptually probably the easier to use.

Using the shared memory driver

With the shmem shared memory driver we write and read data blocks from/to memory. The use of this driver is quite similar to the way we would work with physical files. We have already encountered an example of its use in Section Compilation to memory: the filename is replaced by a label, prefixed by the name of the driver, such as "mmjobs.shmem:aLabel". If the module mmjobs is loaded by the model (or another model held in memory at the same time, such as its parent model) we may use the short form "shmem:aLabel". Another case where we would have to explicitly add the name of a module to a driver occurs when we need to distinguish between several shmem drivers defined by different modules.

The exchange of data between different models is carried out through initializations blocks. In general, the shmem driver will be combined with the bin driver to save data in binary format (an alternative, somewhat more restrictive binary format is generated by the raw driver).

Let us now take a look at a modified version testsubshm.mos of our initial test submodel (Section Executing a submodel). This model reads in the index range from memory and writes back the resulting array to memory:

model "Test submodel"
 declarations
  A: range
  B: array(A) of real
 end-declarations

 initializations from "bin:shmem:indata"
  A
 end-initializations

 forall(i in A) B(i):= i^2

 initializations to "bin:shmem:resdata"
  B
 end-initializations
end-model

The model runsubshm.mos to run this submodel may look as follows:

model "Run model testsubshm"

uses "mmjobs"

declarations

modSub: Model

A = 30..40

B: array(A) of real

end-declarations

! Compile the model file

if compile("testsubshm.mos")<>0: exit(1)

load(modSub, "testsubshm.bim") ! Load the bim file

initializations to "bin:shmem:indata"

A

end-initializations

run(modSub) ! Start model execution

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

initializations from "bin:shmem:resdata"

B

end-initializations

writeln(B)

end-model

Before the submodel run is started, the index range is written to memory, and after its end we retrieve the result array and print it out from the parent model.

If memory blocks are no longer used, it is recommended to free them by calling fdelete (a subroutine provided by module mmsystem, which then also needs to appear in the uses statement), especially if the data blocks are large. Data blocks survive the termination of the model that created them, even if that model is unloaded explicitly, until the module mmjobs is unloaded (explicitly or by the termination of the Mosel session). At the end of our parent model we might thus add the lines

fdelete("shmem:indata")

fdelete("shmem:resdata")

Using the memory pipe driver

The memory pipe I/O driver mempipe works in the opposite way to what we have seen for the shared memory driver: a pipe first needs to be opened for reading before it can be written to. That means the reading side (initializations from) opens the pipe, and only then can the writing side (initializations to) write to it. The submodel (file testsubpip.mos) now looks as follows:

model "Test submodel"
 declarations
  A: range
  B: array(A) of real
 end-declarations

 initializations from "mempipe:indata"
  A
 end-initializations

 forall(i in A) B(i):= i^2

 initializations to "mempipe:resdata"
  B
 end-initializations
end-model

This is indeed not very different from the previous submodel. However, there are more changes to the parent model (file runsubpip.mos): the input data is now written by this model after the submodel run has started. The parent model then opens a new pipe to read the result data before the submodel terminates its execution.

model "Run model testsubpip"

uses "mmjobs"

declarations

modSub: Model

A = 30..40

B: array(A) of real

end-declarations

! Compile the model file

if compile("testsubpip.mos")<>0: exit(1)

load(modSub, "testsubpip.bim") ! Load the bim file

run(modSub) ! Start model execution

initializations to "mempipe:indata"

A

end-initializations

initializations from "mempipe:resdata"

B

end-initializations

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

writeln(B)

end-model

Once a model has opened a pipe for reading, it remains blocked until it has received the requested data through this pipe. The program control flow in the present case is therefore as follows:

- The controlling (parent) model starts the submodel.

- The submodel opens the input data pipe and waits for its parent to write to it.

- Once the input data has been communicated the submodel continues its execution while the parent model opens the result data pipe and waits for the results.

- When the result data pipe is open, the submodel writes to the result data pipe and then terminates.

- The controlling model prints out the result.

Shared data structures for cloned models

For the special case of submodels that are a clone of the controlling model itself (that is, the load for the submodel instance duplicates the parent model in the same Mosel instance instead of reading in another BIM file) it is possible to declare shared data structures that are immediately accessible for all clones without any copying via initializations from/to. Note that only arrays, lists and sets of basic types (integer, real, boolean, string) can be shared between cloned models and the structure of the data cannot be changed while they are shared.

- It is possible to assign new values to existing entries of an array, but it is not possible to add or remove entries from an array.

- Sets and lists cannot be modified while being shared.

If we wish to work with this functionality for the small example from the previous section, we need to implement the parent and submodel in a single file runsubclone.mos as shown below.

model "Sharing data between clones"

uses "mmjobs"

declarations

modSub: Model

A = 30..40 ! Constant sets are shared

B: shared array(A) of real ! Shared array structure

end-declarations

if getparam("sharingstatus")<=0 then

! **** In main model ****

writeln_("In main: ", B) ! *** Output: In main: [0,...0]

load(modSub) ! Clone the current model

run(modSub) ! Run the submodel...

waitforend(modSub) ! ...and wait for its end

writeln_("After sub: ", B) ! *** Output: After sub: [900,...1600]

else

! **** In submodel ****

writeln_("In sub: ", B) ! *** Output: In sub: [0,...0]

forall(i in A) B(i):= i^2 ! Modify the shared data

end-if

end-model

Working with remote Mosel instances

The remote execution of submodels requires only a few additions to models running submodels on a single Mosel instance. The examples in this section show how to extend a selection of the models we have seen so far with distributed computing functionality. Before you access Mosel on a remote host, or an additional local instance of Mosel, you need to start the Mosel server (on the node you wish to use) with the command

xprmsrv

This command may take some options, such as the verbosity level, and the TCP port to be used by connections. The options are documented in the section on mmjobs in the Mosel Language Reference Manual, and you can also display a list with

xprmsrv -h

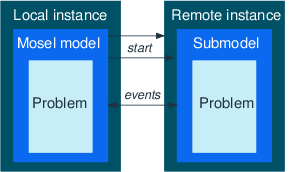

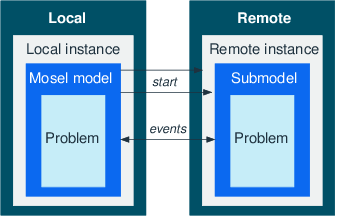

Figure 4: Remote submodel execution

Executing a submodel remotely

To execute a model remotely, we first need to create a new Mosel instance on the remote node with the connect statement. The string that identifies the remote machine may be its local name, an IP address, or the empty string for the current node. In the example runrtdistr.mos below, we compile the submodel locally and, after connecting, load the BIM file into the remote instance (notice the use of the rmt I/O driver to specify that the BIM file is located on the root node). The load subroutine now takes an additional first argument indicating the Mosel instance we want to use. Everything else, including the run statement and the handling of events, remains the same as in the single-instance version of this model from Section Runtime parameters.

model "Run model rtparams remotely"

uses "mmjobs"

declarations

modPar: Model

mosInst: Mosel

end-declarations

! Compile the model file

if compile("rtparams.mos")<>0: exit(1)

NODENAME:= "" ! "" for current node, or name, or IP address

! Open connection to a remote node

if connect(mosInst, NODENAME)<>0: exit(2)

! Load the bim file

load(mosInst, modPar, "rmt:rtparams.bim")

! Start model execution + parameter settings

run(modPar, "PARAM1=" + 2 + ",PARAM2=" + 3.4 +

",PARAM3='a string'" + ",PARAM4=" + true)

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-model

Alternatively, we might have compiled the submodel remotely after establishing the connection, for instance with the following code (the rmt prefix now appears in the compilation since we assume that the submodel file is located at the same place as its parent model):

if connect(mosInst, NODENAME)<>0: exit(2)
if compile(mosInst, "", "rmt:rtparams.mos", "rtparams.bim")<>0 then
 exit(1)
end-if
load(mosInst, modPar, "rtparams.bim")

Compiling the submodel on the original (root) node is preferable if we wish to execute several model instances on different remote nodes, and it may also be necessary if write access is not permitted on the remote node.

By default, the rmt driver refers to the root node. It is also possible to prefix the filename with a node number enclosed in square brackets; the special node number -1 denotes the immediate parent node, as in

load(mosInst, modPar, "rmt:[-1]rtparams.bim")

Note: In this example, we assume that we know of a specific Mosel instance that we wish to connect to. The example model in Section Finding available Mosel servers shows how to search for available xprmsrv servers on the local network.

Parallel submodels in distributed architecture

The following model runrtpardistr.mos is an extension of the example from Section Parallel submodels. The 10 model instances to be run are now distributed over 5 Mosel instances, assigning 2 models per instance.

model "Run model rtparams in distributed architecture"

uses "mmjobs", "mmsystem"

declarations

A = 1..10

B = 1..5

modPar: array(A) of Model

moselInst: array(B) of Mosel

NODENAMES: array(B) of string

end-declarations

!!! Select the (remote) machines to be used:

!!! Use names, IP addresses, or empty string

!!! for the node running this model

forall(i in B) NODENAMES(i):= ""

! Compile the model file locally on root

if compile("rtparams3.mos")<>0: exit(1)

instct:=0

forall(i in A) do

if isodd(i) then

instct+=1 ! Connect to a remote machine

if connect(moselInst(instct), NODENAMES(instct))<>0: exit(2)

end-if

writeln_("Current node: ", getsysinfo(SYS_NODE),

" submodel node: ", getsysinfo(moselInst(instct), SYS_NODE))

! Load the bim file (located at root node)

load(moselInst(instct), modPar(i), "rmt:rtparams3.bim")

! Start remote model execution

run(modPar(i), "PARAM1=" + i + ",PARAM2=" + 0.1*i +

",PARAM3='string " + i + "'" + ",PARAM4=" + isodd(i))

end-do

forall(i in A) do

wait ! Wait for model termination

dropnextevent ! Ignore termination event message

end-do

end-model

We work here with a slightly modified version rtparams3.mos of the submodel that has some added reporting functionality, displaying node and model numbers and the system name of the node.

    model "Runtime parameters"
     uses "mmjobs", "mmsystem"

     parameters
      PARAM1 = 0
      PARAM2 = 0.5
      PARAM3 = ''
      PARAM4 = false
     end-parameters

     writeln_("Node: ", getparam("NODENUMBER"), " ", getsysinfo(SYS_NODE),
       ". Parent: ", getparam("PARENTNUMBER"),
       ". Model number: ", getparam("JOBID"),
       ". Parameters: ",
       PARAM1, " ", PARAM2, " ", PARAM3, " ", PARAM4)

    end-model

Job queue for parallel execution in a distributed architecture

Let us now take a look at how we can extend the job queue example from Section Job queue for managing parallel submodels to the case of multiple Mosel instances. We shall work with a single queue (list) of jobs. As soon as a model has terminated, we take the first job from the queue and start it with the model that has just terminated, that is, on the same Mosel instance. Each Mosel instance processes at most NUMPAR submodels at any time.
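To make the queueing policy concrete, here is a small sequential Java simulation. It is a sketch that assumes each job has a known, made-up duration. In reality, run times are only revealed by the termination events. Each node contributes NUMPAR slots, and every slot starts with one job. Whenever a run ends, its slot takes the first job still in the queue. The names JobQueueSim and Run are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.PriorityQueue;

// Hypothetical simulation of the job-queue policy (not Mosel code).
// Durations are invented; the controller only "sees" the end of each run.
final class JobQueueSim {
    static final class Run {
        final int job, slot, start, end;
        final String node;
        Run(int job, int slot, String node, int start, int end) {
            this.job = job; this.slot = slot; this.node = node;
            this.start = start; this.end = end;
        }
    }

    static List<Run> simulate(List<String> nodes, int numPar, int[] duration) {
        Deque<Integer> jobList = new ArrayDeque<>();
        for (int j = 0; j < duration.length; j++) jobList.add(j);

        // Running models, earliest end first (ties broken by slot number).
        PriorityQueue<Run> running = new PriorityQueue<>((a, b) ->
            a.end != b.end ? Integer.compare(a.end, b.end) : Integer.compare(a.slot, b.slot));

        // Initial lot: every slot (numPar per node) receives one job.
        int slots = nodes.size() * numPar;
        for (int s = 0; s < slots && !jobList.isEmpty(); s++) {
            int j = jobList.poll();
            running.add(new Run(j, s, nodes.get(s / numPar), 0, duration[j]));
        }

        List<Run> finished = new ArrayList<>();
        while (!running.isEmpty()) {
            Run done = running.poll();                // next "end" event
            finished.add(done);
            if (!jobList.isEmpty()) {                 // reuse the freed slot
                int j = jobList.poll();
                running.add(new Run(j, done.slot, done.node,
                                    done.end, done.end + duration[j]));
            }
        }
        return finished;
    }

    public static void main(String[] args) {
        int[] duration = {3, 1, 4, 1, 5, 9, 2, 6, 5, 3};   // made-up values
        for (Run r : simulate(List.of("localhost", "localhost2"), 2, duration))
            System.out.println("End of job " + r.job + " (slot " + r.slot
                + " on " + r.node + ") at t=" + r.end);
    }
}
```

Because each slot is refilled only after its previous run has ended, no node ever runs more than NUMPAR jobs at once, which mirrors the MaxMod(n) limit in the model below.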

    model "Run model rtparams with job queue"
     uses "mmjobs", "mmsystem"

     parameters
      J=10                               ! Number of jobs to run
      NUMPAR=2                           ! Number of parallel model executions
     end-parameters                      ! (preferably <= no. of processors)

     forward procedure start_next_job(submod: Model)

     declarations
      RM: range                          ! Model indices
      JOBS = 1..J                        ! Job (instance) indices
      modPar: array(RM) of Model         ! Models
      jobid: array(set of integer) of integer  ! Job index for model UIDs
      JobList: list of integer           ! List of jobs
      JobsRun: set of integer            ! Set of finished jobs
      JobSize: integer                   ! Number of jobs to be executed
      Msg: Event                         ! Messages sent by models
      NodeList: list of string           ! Names of the nodes to be used
      nodeInst: array(set of string) of Mosel  ! Mosel instances on remote nodes
      nct: integer
      modNode: array(set of integer) of string ! Node used for a model
      MaxMod: array(set of string) of integer
     end-declarations

    ! Compile the model file locally
     if compile("rtparams.mos")<>0: exit(1)

    !**** Setting up remote Mosel instances ****
     sethostalias("localhost2","localhost")
     NodeList:= ["localhost", "localhost2"]
     !!! This list must have at least 1 element.
     !!! Use machine names within your local network, IP addresses, or
     !!! empty string for the current node running this model.
     forall(n in NodeList) MaxMod(n):= NUMPAR
     !!! Adapt this setting to number of processors and licences per node
     forall(n in NodeList, nct as counter) do
      create(nodeInst(n))
      if connect(nodeInst(n), n)<>0: exit(1)
      if nct>= J then break; end-if      ! Stop if started enough instances
     end-do

    !**** Loading model instances ****
     nct:=0
     forall(n in NodeList, m in 1..MaxMod(n), nct as counter) do
      create(modPar(nct))
      load(nodeInst(n), modPar(nct), "rmt:rtparams.bim")  ! Load the bim file
      modPar(nct).uid:= nct              ! Store the model ID as UID
      modNode(modPar(nct).uid):= getsysinfo(nodeInst(n), SYS_NODE)
     end-do

     JobList:= sum(i in JOBS) [i]        ! Define the list of jobs (instances)
     JobSize:=JobList.size               ! Store the number of jobs
     JobsRun:={}                         ! Set of terminated jobs is empty

    !**** Start initial lot of model runs ****
     forall(m in RM)
      if JobList<>[] then
       start_next_job(modPar(m))
      end-if

    !**** Run all remaining jobs ****
     while (JobsRun.size<JobSize) do
      wait                               ! Wait for model termination
      Msg:= getnextevent                 ! Retrieve the event
      if Msg.class=EVENT_END then        ! We are only interested in "end" events
       m:= Msg.fromuid                   ! Retrieve the model UID
       JobsRun+={jobid(m)}               ! Keep track of job termination
       writeln_("End of job ", jobid(m), " (model ", m, ")")
       if JobList<>[] then               ! Start a new run if queue not empty
        start_next_job(modPar(m))
       end-if
      end-if
     end-do

     fdelete("rtparams.bim")             ! Cleaning up

    end-model

A new function used by this example is sethostalias: the elements of the list NodeList are used for indexing various arrays and must therefore all be different. If we want to start several Mosel instances on the same machine (here: the local node, designated by localhost), we must make sure that they are addressed with different names. These names can be set up through sethostalias (see the Mosel Language Reference Manual for further detail).
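The indexing constraint can be illustrated with an ordinary Java map, where node names play the role of the index set of nodeInst and MaxMod. This is an analogy under stated assumptions, not the Mosel implementation. The class HostAliases and its setHostAlias and resolve methods are hypothetical.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical analogy (not Mosel's sethostalias): node names act as array
// indices, so two entries named "localhost" collapse into one. An alias keeps
// a distinct key that still resolves to the same machine when connecting.
final class HostAliases {
    private final Map<String, String> alias = new HashMap<>();

    void setHostAlias(String aliasName, String host) {
        alias.put(aliasName, host);
    }

    /** Host name actually contacted for a given node name. */
    String resolve(String name) {
        return alias.getOrDefault(name, name);
    }

    public static void main(String[] args) {
        Map<String, Integer> maxMod = new LinkedHashMap<>();
        maxMod.put("localhost", 2);
        maxMod.put("localhost", 2);          // same key: still one entry
        System.out.println("without alias: " + maxMod.size() + " node(s)");

        HostAliases hosts = new HostAliases();
        hosts.setHostAlias("localhost2", "localhost");
        maxMod.put("localhost2", 2);         // distinct key: second entry
        for (String n : maxMod.keySet())
            System.out.println(n + " -> connect to " + hosts.resolve(n));
    }
}
```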

The procedure start_next_job, which starts the next job from the queue, remains exactly the same as before (in Section Job queue for managing parallel submodels), so its definition is not repeated here.

Finding available Mosel servers

In the preceding examples we have specified the names of the Mosel instances that we wish to connect to. It is also possible to search for Mosel servers on the local network and hence decide dynamically which remote instances to use for the processing of submodels. This search functionality is provided by the procedure findxsrvs, which returns a set of at most M (second argument) instance names in its third argument. The first argument selects the group number of the servers. Further configuration options, such as the port number to be used, are accessible through control parameters (see the documentation of mmjobs in the Mosel Language Reference for the full list).

    model "Find Mosel servers"
     uses "mmjobs"

     parameters
      M = 20                             ! Max. number of servers to be sought
     end-parameters

     declarations
      Hosts: set of string
      mosInst: Mosel
     end-declarations

     findxsrvs(1, M, Hosts)
     writeln_(Hosts.size, " servers found: ", Hosts)

     forall(i in Hosts)        ! Establish remote connection and print system info
      if connect(mosInst, i)=0 then
       writeln_("Server ", i, ": ", getsysinfo(mosInst))
       disconnect(mosInst)
      else
       writeln_("Connection to ", i, " failed ")
      end-if

    end-model

XPRD: Remote model execution without local installation

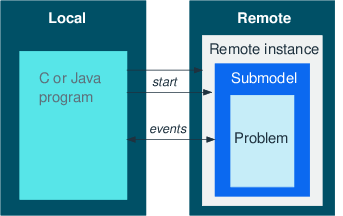

In a distributed Mosel application, the local Mosel (main/parent) model that we have seen in the previous section can be replaced by an XPRD (C or Java) program. The Mosel remote invocation library (XPRD) makes it possible to build applications requiring Xpress technology that run in environments where Xpress is not installed, including architectures for which Xpress is not available. XPRD is a self-contained library (i.e. with no dependency on the usual Xpress libraries) that provides the necessary routines to start Mosel instances either on the local machine or on remote hosts and to control them in much the same way as if they were invoked through the Mosel libraries. Besides the standard instance and model handling operations (connect/disconnect, compile-load-run, stream redirection), the XPRD library supports the file handling mechanisms of mmjobs (transparent file access between instances) as well as its event signaling system (events can be exchanged between the application and running models).

[Figure panels: Mosel, XPRD]

Figure 5: Remote model execution with and without local installation

The following examples show how to replace the controlling Mosel model in the examples from Section Working with remote Mosel instances with a Java program. The Xpress distribution also includes C versions of these examples in the subdirectory examples/mosel/WhitePapers/MoselPar/XPRD. The Mosel (sub)models remain unchanged from the versions shown in the previous section.

Executing a model remotely

The structure of a Java program for compiling and launching the Mosel model rtparams.mos on a remote Mosel instance remains exactly the same as what we have seen for the Mosel model in Section Executing a submodel remotely. This includes the use of the rmt driver, which indicates that the remote instance reads the Mosel source file from the root node (here, the machine running the Java program). The generated BIM file is saved into the temporary working directory of the remote Mosel instance (using the tmp driver).
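In the program below, the run-time parameters are passed to the remote model as a single string of comma-separated NAME=value pairs assigned to execParams. The following sketch shows one way of assembling such a string from a map. The class ExecParams is hypothetical. It assumes that string values contain neither single quotes nor commas, since no escaping is attempted.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper (not part of XPRD): formats NAME=value pairs separated
// by commas; String values are wrapped in single quotes. Assumes the values
// contain no quote or comma characters (no escaping is done).
final class ExecParams {
    private ExecParams() {}

    static String format(Map<String, Object> params) {
        StringJoiner sj = new StringJoiner(",");
        for (Map.Entry<String, Object> e : params.entrySet()) {
            Object v = e.getValue();
            String text = (v instanceof String) ? "'" + v + "'" : String.valueOf(v);
            sj.add(e.getKey() + "=" + text);
        }
        return sj.toString();
    }

    public static void main(String[] args) {
        Map<String, Object> p = new LinkedHashMap<>();   // keeps insertion order
        p.put("PARAM1", 2);
        p.put("PARAM2", 3.4);
        p.put("PARAM3", "a string");
        p.put("PARAM4", true);
        System.out.println(format(p));
        // prints PARAM1=2,PARAM2=3.4,PARAM3='a string',PARAM4=true
    }
}
```

A LinkedHashMap is used so that the parameters appear in the order in which they were added, matching the hand-built string in runrtdistr.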

    import com.dashoptimization.*;
    import java.lang.*;
    import java.io.*;

    public class runrtdistr
    {
     public static void main(String[] args) throws Exception
     {
      XPRD xprd=new XPRD();
      XPRDMosel mosInst=null;
      XPRDModel modPar=null;

      // Use the name or IP address of a machine in
      // your local network, or "" for current node
      String NODENAME = "";

      // Open connection to a remote node
      mosInst=xprd.connect(NODENAME);
      // Compile the model file
      mosInst.compile("", "rmt:rtparams.mos", "tmp:rp.bim");
      // Load bim file into the remote instance
      modPar=mosInst.loadModel("tmp:rp.bim");
      // Run-time parameters
      modPar.execParams = "PARAM1=" + 2 + ",PARAM2=" + 3.4 +
                          ",PARAM3='a string'" + ",PARAM4=true";
      modPar.run();                 // Run the model
      xprd.waitForEvent();          // Wait for model termination
      xprd.dropNextEvent();         // Ignore termination event message

      System.out.println("`rtparams' returned: " + modPar.getResult());

      mosInst.disconnect();         // Disconnect remote instance
     }
    }

Only the XPRD library is required for compiling and running this Java program. The corresponding commands typically look as follows (Linux/Unix version, assuming that xprd.jar is located in the current working directory; on Windows, use ; instead of : as the classpath separator):

    javac -cp xprd.jar:. runrtdistr.java
    java -cp xprd.jar:. runrtdistr

Note: In this example, we assume that we know of a specific Mosel instance that we wish to connect to. The program example in Section Finding available Mosel servers shows how to search for available xprmsrv servers on the local network.

Parallel models in distributed architecture

The following Java program runrtpardistr.java extends the simple example from the previous section to running A=10 model instances on B=5 Mosel instances, assigning 2 models to each instance. All submodels are started concurrently, and the Java program waits for their termination.
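Unlike the Mosel version, which gives consecutive pairs of models to the same instance, the program below assigns model i to instance i%B (round-robin). The short sketch below, using the hypothetical class RoundRobin and no XPRD calls, lists which models end up on each instance.

```java
import java.util.Arrays;

// Hypothetical sketch: round-robin assignment of models 0..a-1 to instances
// 0..b-1 via i % b, as in runrtpardistr.java. Instance j receives models
// j, j+b, j+2b, ...; the first a % b instances get one extra model.
final class RoundRobin {
    private RoundRobin() {}

    static int[][] assign(int a, int b) {
        int[][] models = new int[b][];
        for (int j = 0; j < b; j++)
            models[j] = new int[a / b + (j < a % b ? 1 : 0)];
        int[] fill = new int[b];                 // next free position per instance
        for (int i = 0; i < a; i++) {
            int j = i % b;
            models[j][fill[j]++] = i;
        }
        return models;
    }

    public static void main(String[] args) {
        int[][] m = assign(10, 5);
        for (int j = 0; j < m.length; j++)
            System.out.println("instance " + j + ": " + Arrays.toString(m[j]));
    }
}
```

With A=10 and B=5, both schemes put exactly 2 models on each instance. Only the pairing differs: here instance 0 runs models 0 and 5.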

    import com.dashoptimization.*;
    import java.lang.*;
    import java.io.*;

    public class runrtpardistr
    {
     static final int A=10;
     static final int B=5;

     public static void main(String[] args) throws Exception
     {
      XPRD xprd=new XPRD();
      XPRDMosel[] mosInst=new XPRDMosel[B];
      XPRDModel[] modPar=new XPRDModel[A];
      String[] NODENAMES=new String[B];
      int i,j;

      // Use the name or IP address of a machine in
      // your local network, or "" for current node
      for(j=0;j<B;j++) NODENAMES[j] = "localhost";

      // Open connection to remote nodes
      for(j=0;j<B;j++)
       mosInst[j]=xprd.connect(NODENAMES[j]);

      for(j=0;j<B;j++)
       System.out.println("Submodel node: " +
         mosInst[j].getSystemInformation(XPRDMosel.SYS_NODE) + " on " +
         mosInst[j].getSystemInformation(XPRDMosel.SYS_NAME));

      // Compile the model file on one instance
      mosInst[0].compile("", "rmt:rtparams3.mos", "rmt:rp3.bim");

      for(i=0;i<A;i++)
      {
       // Load the bim file into remote instances
       modPar[i]=mosInst[i%B].loadModel("rmt:rp3.bim");
       // Run-time parameters
       modPar[i].execParams = "PARAM1=" + i + ",PARAM2=" + (0.1*i) +
         ",PARAM3='a string " + i + "',PARAM4=" + (i%2==0);
       modPar[i].run();             // Run the model
      }

      for(i=0;i<A;i++)
      {
       xprd.waitForEvent();         // Wait for model termination
       xprd.dropNextEvent();        // Ignore termination event message
      }

      for(j=0;j<B;j++)
       mosInst[j].disconnect();     // Disconnect remote instance

      new File("rp3.bim").delete(); // Cleaning up
     }
    }

Job queue for parallel execution in a distributed architecture

The following Java program implements a job queue for coordinating the execution of a list of model runs on several remote Mosel instances, similarly to the Mosel model in Section Job queue for parallel execution in a distributed architecture. Each Mosel instance processes up to NUMPAR concurrent submodels. The job queue and the list of terminated jobs are represented by List structures. The program closely matches the previous implementation with a controlling Mosel model, including the use of the subroutine startNextJob to retrieve the next job from the queue and to start its processing.
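The control flow of this program can be mimicked without XPRD: block until a termination event arrives, record the finished job, then restart the freed model with the next queued job. In the sketch below, worker threads play the role of remote models and post their slot number to a LinkedBlockingQueue. Its blocking take() corresponds roughly to waitForEvent followed by getNextEvent. This is a hypothetical stand-in (class EventLoopSketch), not a description of how XPRD is implemented.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.Set;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical stand-in for the XPRD event loop (no XPRD classes used):
// each worker thread plays a remote model and, when done, posts its slot
// number as an "END event" to a blocking queue read by the main thread.
final class EventLoopSketch {
    static Set<Integer> runAll(int jobs, int slots) {
        Deque<Integer> jobList = new ArrayDeque<>();
        for (int i = 0; i < jobs; i++) jobList.add(i);
        BlockingQueue<Integer> events = new LinkedBlockingQueue<>();
        int[] jobOfSlot = new int[slots];          // plays the role of jobid[]
        Set<Integer> jobsRun = new HashSet<>();
        ExecutorService pool = Executors.newFixedThreadPool(slots);
        try {
            // Initial lot: one job per slot while the queue is not empty.
            for (int s = 0; s < slots && !jobList.isEmpty(); s++)
                startNextJob(jobList, s, jobOfSlot, events, pool);
            while (jobsRun.size() < jobs) {
                int slot = events.take();          // blocks until a model ends
                jobsRun.add(jobOfSlot[slot]);
                System.out.println("End of job " + jobOfSlot[slot] + " (slot " + slot + ")");
                if (!jobList.isEmpty())            // start a new run if queue not empty
                    startNextJob(jobList, slot, jobOfSlot, events, pool);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } finally {
            pool.shutdown();
        }
        return jobsRun;
    }

    private static void startNextJob(Deque<Integer> jobList, int slot, int[] jobOfSlot,
                                     BlockingQueue<Integer> events, ExecutorService pool) {
        int job = jobList.poll();                  // take first job from the queue
        jobOfSlot[slot] = job;                     // only read by the main thread
        pool.execute(() -> {
            try {
                Thread.sleep(5L * (job % 3 + 1));  // pretend the model is solving
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            events.add(slot);                      // signal termination
        });
    }

    public static void main(String[] args) {
        System.out.println("Jobs finished: " + runAll(10, 4).size());
    }
}
```

As in the XPRD program, all bookkeeping (job list, finished jobs, slot-to-job mapping) happens in the single thread that consumes events, so it needs no locking.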

    import com.dashoptimization.*;
    import java.lang.*;
    import java.util.*;
    import java.io.*;

    public class runrtparqueued
    {
     static final int J=10;          // Number of jobs to run
     static final int NUMPAR=2;      // Number of parallel model executions
                                     // (preferably <= no. of processors)
     static int[] jobid;
     static int[] modid;
     static String[] modNode;

     public static void main(String[] args) throws Exception
     {
      XPRD xprd=new XPRD();
      // Use the name or IP address of a machine in
      // your local network, or "" for current node
      String[] NodeList={"localhost","localhost"};
      final int nbNodes=(NodeList.length<J?NodeList.length:J);
      XPRDMosel[] mosInst=new XPRDMosel[nbNodes];
      int[] MaxMod=new int[nbNodes];
      XPRDModel[] modPar=new XPRDModel[nbNodes*NUMPAR];
      int nct;
      List<Integer> JobList=new ArrayList<Integer>();
      List<Integer> JobsRun=new ArrayList<Integer>();
      int JobSize;
      XPRDEvent event;
      int lastId=0;

      //**** Setting up remote Mosel instances ****
      for(int n=0;n<nbNodes;n++)
      {
       mosInst[n]=xprd.connect(NodeList[n]);
       MaxMod[n]= NUMPAR;
         // Adapt this setting to number of processors and licences per node
      }

      // Compile the model file on first node
      mosInst[0].compile("", "rmt:rtparams.mos", "rmt:rtparams.bim");

      //**** Loading model instances ****
      nct=0;
      for(int n=0;(n<nbNodes) && (nct<J);n++)
       for(int m=0;(m<MaxMod[n]) && (nct<J);m++)
       {  // Load the bim file
        modPar[nct]=mosInst[n].loadModel("rmt:rtparams.bim");
        if(modPar[nct].getNumber()>lastId) lastId=modPar[nct].getNumber();
        nct++;
       }

      jobid=new int[lastId+1];
      modid=new int[lastId+1];
      modNode=new String[lastId+1];
      for(int j=0;j<nct;j++)
      {
       int i=modPar[j].getNumber();
       modid[i]=j;                  // Store the model ID
       modNode[i]=modPar[j].getMosel().getSystemInformation(XPRDMosel.SYS_NODE);
      }

      for(int i=0;i<J;i++)          // Define the list of jobs (instances)
       JobList.add(Integer.valueOf(i));
      JobSize=JobList.size();       // Store the number of jobs
      JobsRun.clear();              // List of terminated jobs is empty

      //**** Start initial lot of model runs ****
      for(int j=0;j<nct;j++)
       startNextJob(JobList,modPar[j]);

      //**** Run all remaining jobs ****
      while(JobsRun.size()<JobSize)
      {
       xprd.waitForEvent();         // Wait for model termination
       event=xprd.getNextEvent();   // We are only interested in "end" events
       if(event.eventClass==XPRDEvent.EVENT_END)
       {                            // Keep track of job termination
        JobsRun.add(Integer.valueOf(jobid[event.sender.getNumber()]));
        System.out.println("End of job "+ jobid[event.sender.getNumber()] +
          " (model "+ modid[event.sender.getNumber()]+ ")");
        if(!JobList.isEmpty())      // Start a new run if queue not empty
         startNextJob(JobList,event.sender);
       }
      }

      for(int n=0;n<nbNodes;n++)
       mosInst[n].disconnect();     // Terminate remote instances

      new File("rtparams.bim").delete();  // Cleaning up
     }

     //******** Start the next job in a queue ********
     static void startNextJob(List<Integer> jobList,XPRDModel model) throws Exception
     {
      Integer job;
      int i;

      job=jobList.remove(0);        // Retrieve and remove first entry from job list
      i=job.intValue();
      System.out.println("Start job "+ job + " (model " + modid[model.getNumber()]+
        ") on "+ modNode[model.getNumber()] );
      model.execParams = "PARAM1=" + i + ",PARAM2=" + (0.1*i) +
        ",PARAM3='a string " + i + "',PARAM4=" + (i%2==0);
      model.run();
      jobid[model.getNumber()]=i;
     }
    }

Finding available Mosel servers

In the preceding examples we have specified the names of the Mosel instances that we wish to connect to. It is also possible to search for Mosel servers on the local network and hence decide dynamically which remote instances to use for the processing of submodels. This search functionality is provided by the method findXsrvs of class XPRD, which returns a set of at most M (second argument) instance names in its third argument. The first argument of this method selects the group number of the servers. Further configuration options, such as the port number, are accessible through control parameters (please refer to the documentation of findXsrvs in the XPRD Javadoc for further detail).

    import com.dashoptimization.*;
    import java.lang.*;
    import java.io.*;
    import java.util.*;

    public class findservers
    {
     static final int M=20;

     public static void main(String[] args) throws Exception
     {
      XPRD xprd=new XPRD();
      XPRDMosel mosInst=null;
      Set<String> Hosts=new HashSet<String>();

      xprd.findXsrvs(1, M, Hosts);
      System.out.println(Hosts.size() + " servers found.");

      for(Iterator<String> h=Hosts.iterator(); h.hasNext();)
      {
       String i=h.next();
       try {
        mosInst=xprd.connect(i);    // Open connection to a remote node
                                    // Display system information
        System.out.println("Server " + i + ": " + mosInst.getSystemInformation());
        mosInst.disconnect();       // Disconnect remote instance
       }
       catch(IOException e) {
        System.out.println("Connection to " + i + " failed");
       }
      }
     }
    }

© 2001-2024 Fair Isaac Corporation. All rights reserved. This documentation is the property of Fair Isaac Corporation (“FICO”). Receipt or possession of this documentation does not convey rights to disclose, reproduce, make derivative works, use, or allow others to use it except solely for internal evaluation purposes to determine whether to purchase a license to the software described in this documentation, or as otherwise set forth in a written software license agreement between you and FICO (or a FICO affiliate). Use of this documentation and the software described in it must conform strictly to the foregoing permitted uses, and no other use is permitted.