GPU-Powered Optimization: FICO's Experience with NVIDIA cuOpt

NVIDIA is making cuOpt available to the open-source community, marking a significant milestone in optimization

In optimization, having the right tool for the right problem can make all the difference. That's why we at FICO are excited that NVIDIA is making cuOpt available to the open-source community. This marks a significant milestone in optimization, opening new opportunities for researchers and industry leaders to explore large-scale problem-solving with GPUs and the NVIDIA GPU-accelerated primal-dual linear programming (PDLP) solver.

GPU-Powered PDLP

Primal-dual linear programming (PDLP) is a powerful addition to the solver landscape, offering speed and memory efficiency that make it an appealing option for certain LP problems. While not a silver bullet, it is shaping up to become a game-changer for large-scale optimization. Recognizing its potential, we released our own CPU-based PDLP solver for FICO Xpress in spring 2024, and our research team is actively investigating GPU implementations.

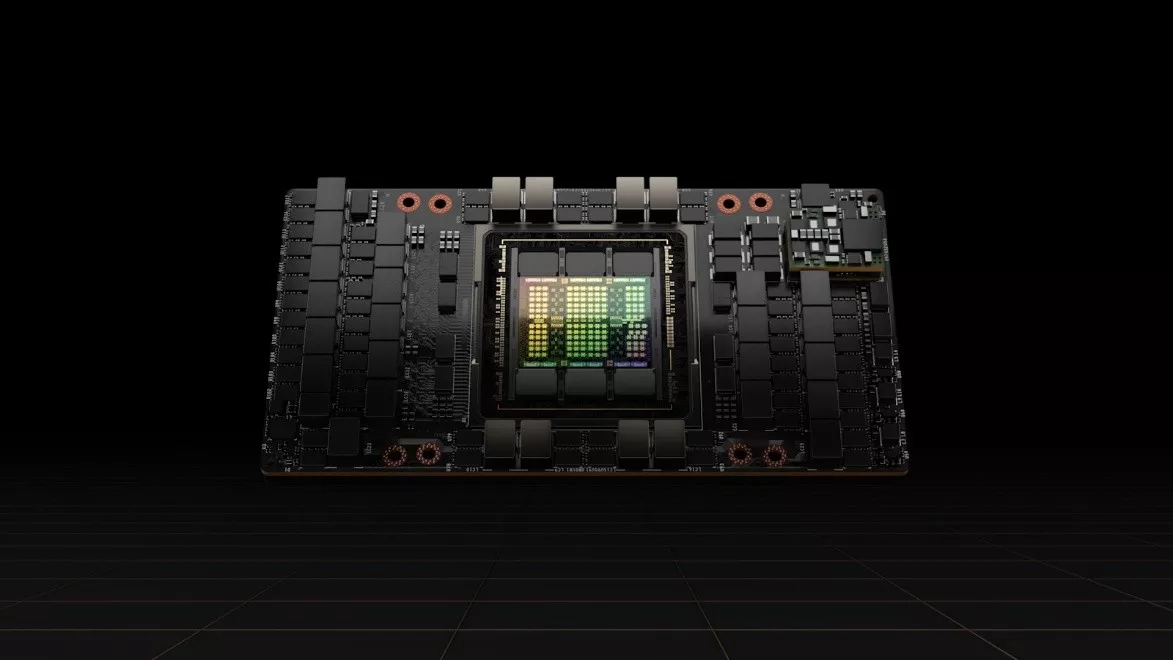

We are particularly excited about NVIDIA's progress in this field. On individual instances, we've observed speedups of up to 100x with the NVIDIA GPU-based cuOpt solver compared to traditional LP solvers, and a 25x speedup compared to our in-house CPU-based PDLP implementation. Of course, direct comparisons should be made carefully: high-end GPUs such as the NVIDIA H100 are an entirely different class of hardware from CPUs. Even so, the performance gains are impressive.

However, traditional LP solvers such as those in FICO Xpress remain dominant for most problems, often delivering higher accuracy at scale. PDLP’s speed advantage partly stems from relaxing precision requirements, meaning the gap will shrink if high-accuracy solutions are mandated. Yet, for large-scale problems where high precision isn’t critical, GPU-accelerated PDLP is already proving to be a valuable complement to traditional methods — and this may just be the beginning.
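To make the precision trade-off concrete, here is a minimal sketch of the textbook primal-dual hybrid gradient (PDHG) iteration that PDLP-type solvers build on. It is not the cuOpt or FICO Xpress implementation: the function name `pdhg_lp`, the dense list-of-rows matrix, the absolute stopping test, and the two-variable toy problem are all assumptions made for illustration, and production solvers add techniques such as restarts, scaling, and adaptive step sizes.

```python
def pdhg_lp(c, A, b, tol, max_iter=100_000):
    """Minimize c.x subject to A x = b, x >= 0 with a plain PDHG iteration.

    Illustrative only. A is a dense list of rows; returns (x, y, iterations).
    """
    m, n = len(A), len(c)
    # Convergence needs tau * sigma * ||A||_2^2 < 1. The Frobenius norm is a
    # cheap upper bound on the spectral norm, so this choice is safe.
    fro = sum(a * a for row in A for a in row) ** 0.5
    tau = sigma = 0.9 / fro
    x, y = [0.0] * n, [0.0] * m

    def reduced_costs(dual):
        # c - A^T y
        return [c[j] - sum(A[i][j] * dual[i] for i in range(m)) for j in range(n)]

    def residual(primal):
        # b - A x
        return [b[i] - sum(A[i][j] * primal[j] for j in range(n)) for i in range(m)]

    for k in range(1, max_iter + 1):
        # Primal step: gradient move, then projection onto x >= 0.
        r = reduced_costs(y)
        x_new = [max(0.0, x[j] - tau * r[j]) for j in range(n)]
        # Dual step uses the extrapolated point 2 * x_new - x.
        x_bar = [2.0 * x_new[j] - x[j] for j in range(n)]
        res_bar = residual(x_bar)
        y = [y[i] + sigma * res_bar[i] for i in range(m)]
        x = x_new

        # Stop when primal infeasibility, dual infeasibility, and the
        # duality gap are all within the requested (absolute) tolerance.
        primal_err = max(abs(v) for v in residual(x))
        dual_err = max(max(0.0, -rj) for rj in reduced_costs(y))
        gap = abs(sum(cj * xj for cj, xj in zip(c, x))
                  - sum(bi * yi for bi, yi in zip(b, y)))
        if max(primal_err, dual_err, gap) <= tol:
            return x, y, k
    return x, y, max_iter


# Toy problem (an assumption): minimize x1 + 2*x2 with x1 + x2 = 1, x >= 0.
c, A, b = [1.0, 2.0], [[1.0, 1.0]], [1.0]
for tol in (1e-2, 1e-8):
    x, y, iters = pdhg_lp(c, A, b, tol)
    print(f"tol={tol:g}: {iters} iterations, x={x}")
```

Each iteration needs only matrix-vector products, which is what makes the method a natural fit for GPUs, and the tighter tolerance takes more iterations on the same problem, which is the effect described above.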

GPU-Based Solvers as a Complement to Traditional Solvers

One of the ongoing challenges in mathematical optimization is balancing computational resources across different solvers. A key advantage of NVIDIA GPU-based PDLP is that it can run alongside traditional CPU-based solvers without competing for CPU resources, with the caveat that certain memory fields will have to be shared. This opens the door to new hybrid approaches.
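One simple hybrid pattern is a concurrent race: start several solvers on the same problem and keep whichever finishes first. The sketch below only illustrates that control flow under stated assumptions. `stand_in_solver` is a hypothetical placeholder that sleeps instead of solving, the names `solver_a` and `solver_b` are made up, and nothing here calls cuOpt or FICO Xpress.

```python
import threading
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def stand_in_solver(name, work_seconds, stop):
    """Hypothetical placeholder for a real solver call; it only sleeps."""
    deadline = time.monotonic() + work_seconds
    while time.monotonic() < deadline:
        if stop.is_set():
            return None  # another solver already finished; give up early
        time.sleep(0.005)
    return name


def race(solvers):
    """Run all stand-in solvers concurrently and return the first result."""
    stop = threading.Event()
    with ThreadPoolExecutor(max_workers=len(solvers)) as pool:
        futures = [pool.submit(stand_in_solver, name, secs, stop)
                   for name, secs in solvers]
        done, _ = wait(futures, return_when=FIRST_COMPLETED)
        stop.set()  # cooperative cancellation of the slower solvers
        return next(iter(done)).result()


winner = race([("solver_a", 1.0), ("solver_b", 0.05)])
print("first to finish:", winner)
```

In a real setup the two tasks would be a CPU-based solver and a GPU-based solver, so the second would add little CPU load while it runs. How the losing solver is interrupted depends on the solver's own API and is not shown here.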

Another critical breakthrough with cuOpt is ensuring determinism — a key requirement for mathematical optimization. While GPU-based computing often lacks determinism, particularly in sparse operations, NVIDIA has overcome this challenge with cuOpt, making it a reliable addition to enterprise-grade solvers.

The release of cuOpt as an open-source project will allow researchers worldwide to build on NVIDIA's foundation, explore new hybrid optimization approaches, and accelerate progress in GPU-accelerated optimization. With cuOpt's open-source availability, the future of large-scale optimization is more accessible than ever. We look forward to seeing how the global research community and industry innovators leverage this new tool to drive the next wave of optimization breakthroughs.

Matt Stanley, VP of Decision Science at FICO, said, “As the optimization landscape continues to evolve, we're eager to see how the industry tackles hardware limitations and improves algorithmic efficiency at higher precision levels. The FICO Xpress team is continuing research in this area and looks forward to having insightful exchanges with NVIDIA to explore the full potential of GPU-powered optimization.”

Read NVIDIA’s post on this topic here.

Discover FICO's Mathematical Optimization Solutions

- Explore FICO Xpress Optimization

- Visit our optimization community
