Analytics Model Management: 7 Best Practices to Improve Compliance, Reporting and Performance

These best practices can help you not just meet regulators’ requirements but also increase the value your models add to the business.

Why Is Model Management Important?

Analytics models, in particular the predictive models used to forecast customer behavior and other metrics, are a critical part of the banking system and are closely watched by regulators. There's no doubt that increased regulatory scrutiny of predictive models has been a burden on financial institutions. But there is a silver lining: it has brought about a renewed focus on analytic model management. Once an institution has the people, processes and technology in place to properly manage and track its models, it can go beyond merely complying with regulations to evaluating and refining model performance in ways that control losses and boost portfolio profitability. Compliance and performance: it's a win-win.

Of course, all this is easier said than done. Indeed, setting up an effective model management infrastructure remains one of the toughest challenges facing our clients, who often struggle to:

- Manage growing model portfolios. The number of predictive models is increasing rapidly; large lenders may have thousands in production. Managing and tracking them all, and ensuring they continue to perform well, is a mammoth challenge.

- Respond to regulatory requests. Many find it difficult to respond promptly without dragging down the productivity of their analytic teams.

- Ensure transparency. Models must be easy to understand, defend and explain to regulators, customers and even your own executives.

- Keep up with documentation. Tracking and reporting may not be glamorous, but they are clearly a necessity for demonstrating compliance and responding rapidly to questions from management and regulators.

The best practices below are good steps toward high-quality model management. They span the full lifecycle of a model: development, execution, monitoring and maintenance.

Model Development

1. Prepare suitable data samples

Data hygiene is critical for successful model development, validation and ongoing monitoring, and it will be a key focus of any regulatory review. As part of model development, and throughout the lifecycle of the model, you should understand and document the accuracy of data sources, inputs, outputs, transformations and calculations, including how you treat outliers and missing values. We recommend validating the reliability and quality of data sources as one of the earliest steps in model development, and then yearly throughout the model's use.
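As an illustration of what "documenting how you treat outliers and missing values" can look like in practice, here is a minimal sketch that profiles one numeric input. It assumes missing values arrive as `None` and uses the common 1.5 × IQR rule to flag outliers; that rule, the `ColumnProfile` record and the `profile_column` function are our own hypothetical choices, not something the article or any FICO product prescribes.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class ColumnProfile:
    """Data-quality summary for one model input, suitable for documentation."""
    name: str
    n_rows: int
    missing_rate: float
    lower_fence: float
    upper_fence: float
    n_outliers: int


def profile_column(name: str, values: list, k: float = 1.5) -> ColumnProfile:
    """Summarize missing values and IQR-based outliers for one numeric column.

    Assumes missing values are None and at least two values are present.
    """
    present = [v for v in values if v is not None]
    missing_rate = 1 - len(present) / len(values)
    q1, _, q3 = quantiles(present, n=4)  # quartile cut points
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    n_outliers = sum(1 for v in present if v < lower or v > upper)
    return ColumnProfile(name, len(values), missing_rate, lower, upper, n_outliers)
```

Running this for every input at development time, and again at each yearly review, gives a simple, comparable record of how data quality has changed.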

Equally critical is the design of the development sample. Careful thought needs to be given to ensuring that the data sample you create to train your model reflects, as closely as possible, the population you intend to apply it to in production.

Within many banks, several segmented models exist at the same decision or estimation point to cater for different product or customer requirements. Each of these requires its own segment-specific data sample for development. Segmentation is often driven by a material difference in risk characteristics, which can be identified through analytics, or it is mandated by regulation, such as the Exposure and Sub-Exposure classes required under Basel.

Automated tools can now make the identification of segmentation characteristics significantly faster and easier. FICO® Platform - Analytics Workbench delivers optimized segmentation to substantially improve a model's precision, while maintaining a transparent and interpretable scoring solution for use with regulators.

Both internal model governance policies and regulators require significant model validation before a model is released into production. This requires the creation of multiple data samples, including:

- Depending on volumes, a random sample of 10% to 20% of eligible cases should be set aside from the development sample for validation.

- An out-of-time sample, drawn from a period outside the development sample window, should also be made available.

These holdout samples show whether a model is overfit to the training data and provide a more realistic benchmark of how the model is likely to perform in production.
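The two validation samples described above can be carved out in a few lines. The sketch below is a hypothetical helper, assuming each record is a dict with a `date` key: records on or after a chosen cutoff date form the out-of-time sample, and a seeded random share of the remaining in-time records becomes the holdout. The 20% default sits within the 10% to 20% range mentioned above; the field name, seed and cutoff are illustrative assumptions.

```python
import random
from datetime import date


def split_samples(records: list[dict], oot_start: date,
                  holdout_frac: float = 0.2, seed: int = 42):
    """Split records into development, in-time holdout and out-of-time samples.

    records: dicts with a 'date' key (hypothetical layout).
    oot_start: records dated on or after this form the out-of-time sample.
    holdout_frac: share of in-time records reserved for validation.
    """
    in_time = [r for r in records if r["date"] < oot_start]
    out_of_time = [r for r in records if r["date"] >= oot_start]
    rng = random.Random(seed)  # fixed seed keeps the split reproducible for audit
    shuffled = in_time[:]
    rng.shuffle(shuffled)
    n_holdout = round(len(shuffled) * holdout_frac)
    holdout = shuffled[:n_holdout]
    development = shuffled[n_holdout:]
    return development, holdout, out_of_time
```

Fixing the seed is a deliberate choice: it lets a validator regenerate exactly the same samples later, which helps when answering questions about how a model was built.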

2. Choose the right model type

Financial institutions should select a model type that is appropriate for the available data and the decision area, and that will provide robust predictions. While traditional scorecards remain the most common approach at many banks, the drive for greater decision accuracy means there is increasing exploration of more sophisticated machine learning models, such as XGBoost and random forests.

Regardless of model type, business and regulatory considerations mean you should consider the following:

- Transparency - Your model type should be easy to understand and explain, both internally and externally to regulators and customers. Look for interpretable features that allow you to identify and explain what is driving a score, as regulators will require that you can give a customer an explanation that is understandable and defensible. FICO Platform - Analytics Workbench helps make sure that, whichever model type you choose, there will be sufficient transparency.

- Palatability - Regulators will ask about model outcomes, so it is important that model scores and explanations have a high degree of face validity. Palatability is about intuition and common sense, not complex mathematics. Does your model behave intuitively from a business context and is it directionally correct? For instance, as length of good credit history increases, does the risk score improve?

- Ease of engineering - During development, you may need to engineer or fine-tune a model to ensure it addresses your identified business goal. This may require you to substitute or remove characteristics that, while predictive, may cause regulators or customers to raise objections. You may also wish to alter variable binnings or apply pattern constraints to mitigate the impact of outlier values, smooth noisy data and improve the model's robustness.
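The palatability question above ("as length of good credit history increases, does the risk score improve?") can be checked directly. The following is a minimal sketch under our own assumptions: the characteristic is bucketed with hypothetical bin edges, the average score is computed per bin, and the averages are tested for a monotonic trend. Higher scores are assumed to mean lower risk; this is not the pattern-constraint mechanism of any particular FICO tool.

```python
from bisect import bisect_right
from statistics import mean


def average_score_by_bin(values, scores, edges):
    """Average model score within each bin of one characteristic.

    edges: sorted inner bin boundaries, e.g. [12, 36, 60] months gives 4 bins.
    Empty bins are reported as None.
    """
    bins = [[] for _ in range(len(edges) + 1)]
    for v, s in zip(values, scores):
        bins[bisect_right(edges, v)].append(s)
    return [mean(b) if b else None for b in bins]


def is_monotonic(averages, increasing=True):
    """True if non-empty bin averages never move against the expected direction."""
    seen = [a for a in averages if a is not None]
    pairs = zip(seen, seen[1:])
    return all(a <= b if increasing else a >= b for a, b in pairs)
```

A failed check does not prove the model is wrong, but it flags a characteristic whose behavior you will need to explain, or constrain, before a regulator asks.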

Bottom line: you’ll want to select a model type that makes sense to regulators and customers and can be easily re-engineered when needed. Part of the reason traditional scorecards remain so popular in risk modeling is that they are highly transparent and interpretable.

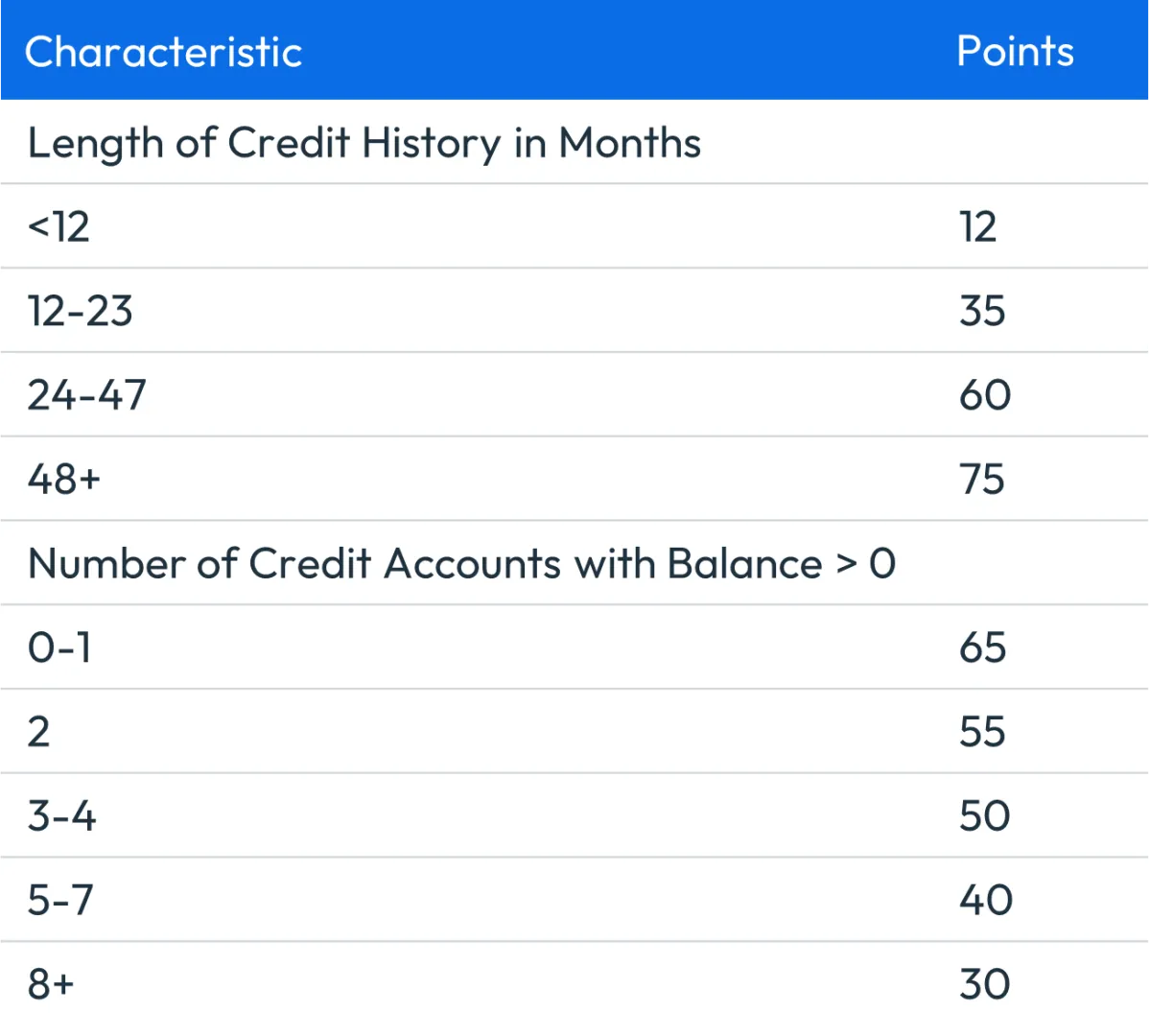

Figure 1: The Inherent Transparency of Traditional Scorecards

Figure 1 illustrates how a traditional scorecard provides a clear connection between each factor and an individual's score. This not only enables the model to generate useful reason codes for explaining decisions to consumers; the same information can also be used to describe the model to regulators or to answer internal questions.
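To make that connection concrete, here is a minimal sketch of an additive scorecard. The characteristics, bin edges and points below are invented for illustration and do not come from Figure 1 or any real FICO model. Reason codes are derived with one common convention, ranking characteristics by how many points the applicant fell short of the best attainable bin; lenders use several variants, so treat this as an assumption.

```python
from bisect import bisect_right

# Hypothetical scorecard: for each characteristic, inner bin edges and the
# points awarded to each bin (one more points entry than there are edges).
SCORECARD = {
    "months_on_file": ([12, 36, 60], [10, 25, 40, 55]),
    "utilization_pct": ([30, 60, 90], [50, 35, 20, 5]),
    "recent_delinquencies": ([1, 2], [60, 30, 0]),
}


def score_applicant(applicant, scorecard=SCORECARD):
    """Return the total score and the points earned on each characteristic."""
    points = {}
    for name, (edges, bin_points) in scorecard.items():
        points[name] = bin_points[bisect_right(edges, applicant[name])]
    return sum(points.values()), points


def reason_codes(points, scorecard=SCORECARD, top_n=2):
    """Rank characteristics by points lost versus the best attainable bin."""
    shortfall = {name: max(scorecard[name][1]) - p for name, p in points.items()}
    ranked = sorted(shortfall, key=shortfall.get, reverse=True)
    return [name for name in ranked[:top_n] if shortfall[name] > 0]
```

For example, an applicant with 24 months on file, 95% utilization and no recent delinquencies scores 25 + 5 + 60 = 90, and the top reasons returned are high utilization followed by short credit history. Every point in the total can be traced to a single bin, which is exactly what makes scorecards easy to explain.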

3. Document thoroughly

With the increased use of predictive models in automated decisioning, regulators worldwide place tremendous importance on documentation and oversight. To answer regulators' questions about how a particular model was developed, and who approved it at key stages of its development, you need the right tools in place to quickly retrieve the supporting evidence.

With that in mind, you should keep an inventory of every model in your operating environment, cataloguing its purpose, usage and restrictions on use. Technical documentation should describe the modeling methodology used, along with supporting evidence for why the final model inputs were chosen. Your documentation should be detailed enough that anyone unfamiliar with the model can understand how it operates, its limitations and your key assumptions.
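A model inventory can start as a structured record per model. The sketch below assumes a hypothetical schema that mirrors the items listed above (purpose, usage, restrictions, methodology, rationale for inputs, approvals by stage) and a hypothetical policy requiring sign-off at three stages. It then reports any documentation gaps, which is the kind of check that makes regulator requests faster to answer.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Approval:
    stage: str          # e.g. "development", "validation", "deployment"
    approver: str
    approved_on: date


@dataclass
class ModelRecord:
    """One inventory entry; field names are illustrative, not a standard."""
    model_id: str
    purpose: str
    usage: str
    restrictions: str
    methodology: str
    input_rationale: str
    approvals: list[Approval] = field(default_factory=list)


# Hypothetical policy: every model needs sign-off at these stages.
REQUIRED_STAGES = {"development", "validation", "deployment"}

TEXT_FIELDS = ("purpose", "usage", "restrictions", "methodology", "input_rationale")


def documentation_gaps(record: ModelRecord) -> list[str]:
    """List empty documentation fields and missing approval stages."""
    gaps = [name for name in TEXT_FIELDS if not getattr(record, name).strip()]
    approved = {a.stage for a in record.approvals}
    gaps += [f"approval:{s}" for s in sorted(REQUIRED_STAGES - approved)]
    return gaps
```

In practice this would live in a governed repository rather than in memory, but the principle is the same: if a field is required, an automated check should be able to tell you when it is missing.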

Model Execution

4. Deploy into the right systems

Predictive models are often core components of business-critical processes that have a material impact on a firm’s financial performance. As such, it is important to ensure they are deployed on appropriate technology.

For example, in the UK, the Prudential Regulation Authority (PRA) sets clear expectations for how internal ratings-based (IRB) firms ensure the accuracy, reliability and integrity of their models. Among these is a clear expectation that firms consider supporting systems and process verification.

The PRA requires that the systems in which models are implemented have been thoroughly tested for that purpose and are subject to rigorous quality and change control processes. There should also be ongoing review of the suitability of these systems, with remediation steps taken where deficiencies are observed. In addition, firms must assess whether the systems in place allow the model to work as intended, with the quality of data inputs and the accuracy, control and auditing of all calculations being key considerations.
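One practical way to evidence that a production system "allows the model to work as intended" is a score reconciliation: run the same cases through the development (reference) implementation and the production system and compare results case by case. The sketch below is our own illustration, not a PRA-mandated procedure; it assumes scores keyed by a case ID and a tolerance of your choosing.

```python
def reconcile_scores(reference: dict[str, float], production: dict[str, float],
                     tolerance: float = 0.0):
    """Compare scores for the same cases from two implementations of one model.

    Returns (IDs missing from production, IDs missing from reference,
    {case_id: (reference_score, production_score)} for differences > tolerance).
    """
    missing_in_prod = sorted(reference.keys() - production.keys())
    missing_in_ref = sorted(production.keys() - reference.keys())
    mismatches = {
        cid: (reference[cid], production[cid])
        for cid in sorted(reference.keys() & production.keys())
        if abs(reference[cid] - production[cid]) > tolerance
    }
    return missing_in_prod, missing_in_ref, mismatches
```

Missing cases point to data-input problems, while score mismatches point to calculation or configuration differences; both are the kinds of deficiencies that should trigger remediation.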

The largest banks around the world execute millions of transactions within business-critical processes on FICO Platform, demonstrating that it provides a reliably accurate, scalable and governed execution environment.

It also meets another key requirement for governance and explainability: a full audit history of every change to production models is recorded to support internal governance processes and regulatory requirements.

Model Monitoring and Maintenance

5. Monitor decision overrides

Regulators require careful monitoring and review of decision overrides, especially when they run counter to the calculated score. Consequently, there should be clear guidelines in place to support your underwriters, as regulators will ask questions such as:

- What is your policy for allowing an override?

- What authority level do you require for override approval?

- How many overrides are you doing every month?

- How do you determine that each type of override is appropriate?

All overrides should be assigned a meaningful override reason code so they can be tracked and each underwriter’s decisions evaluated. Use codes that allow for efficient and effective analysis. This is important because overrides can affect revenues and profitability.

High-side overrides (accounts that score above the cutoff but are declined) may be turning away potentially good customers and reducing profitability, whilst low-side overrides (accounts that score below the cutoff but are approved) may be taking on too much risk.

In both cases, if the underwriting decisions are correct, these accounts should not be expected to perform in line with their scores, and this should be taken into consideration during model monitoring.
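The high-side/low-side definitions above translate directly into code, which makes questions like "how many overrides are you doing every month?" easy to answer. This sketch assumes a hypothetical record layout (`month`, `score`, `decision`, `reason_code`) and treats a score exactly at the cutoff as passing; both are illustrative choices. Overrides recorded without a reason code are counted under `MISSING` so that gaps in tracking are visible rather than silently dropped.

```python
from collections import Counter


def classify_override(score, decision, cutoff):
    """Label a decision relative to the score cutoff (score >= cutoff passes)."""
    if score >= cutoff and decision == "decline":
        return "high-side"
    if score < cutoff and decision == "approve":
        return "low-side"
    return None  # the decision agrees with the score


def summarize_overrides(decisions, cutoff):
    """Count overrides by (month, override type, reason code).

    decisions: iterable of dicts with 'month', 'score', 'decision' and
    'reason_code' keys (hypothetical layout).
    """
    counts = Counter()
    for d in decisions:
        kind = classify_override(d["score"], d["decision"], cutoff)
        if kind is not None:
            counts[(d["month"], kind, d.get("reason_code") or "MISSING")] += 1
    return counts
```

Keeping the override flag alongside each account also makes it straightforward to exclude or separately analyze overridden accounts during model monitoring, as the paragraph above recommends.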

6. Follow monitoring guidelines

Customer profiles and the relationships between characteristics and outcomes can change over time, which can lead to a degradation in model performance. Regular validations provide an early indication that a model may benefit from a redevelopment or realignment.

The following are important considerations for model monitoring:

- Maintain development sample inclusion criteria for ongoing model validation samples. The assessment of whether a model still works should be conducted against the assumptions and expectations from its development. If a model performs in line with expectations against the population it was designed for, but not the population it is being applied to, then this is likely a problem with scope creep and not with the model itself.

- Strive for clarity and consistency. Regulators want to see that you validate on a regular cadence, producing a consistent collection of reports, and that your process and results are repeatable. Regulators also want to know that you have a process in place to determine when further scrutiny is warranted, and that you document the results of your investigation, including any actions you are taking (such as more frequent reassessment, recalibration or rebuilding) when a model falls below an identified threshold.

- Create a supervisory review. In the US, the OCC and the Federal Reserve require validation processes to be reviewed by parties independent of those who develop the model and who design and implement the validation process. Globally, Basel places an equally strong emphasis on governance.

- Never validate in a standalone environment. Models and the scores they produce rarely operate in a vacuum; rather, they are intimately tied to business rules and decision strategies. Verifying that a model score rank-orders risk is important, but to truly understand a model’s effectiveness, you must also consider its interactions with your decision strategies. Tools like FICO® Business Outcome Simulator let you assess the impact of any model in conjunction with your decision rules. When changes are being made, this provides valuable insight not only into shifts in the decision profile but also into downstream key performance indicators, such as revenue and profit.
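Verifying that a score still rank-orders risk is usually done with a discrimination statistic. The sketch below computes the Gini coefficient, defined as 2 × AUC − 1, where AUC is the probability that a randomly chosen good account outscores a randomly chosen bad one (ties count half). It assumes higher scores mean lower risk and uses a direct pairwise comparison, which is easy to audit but scales with goods × bads; a rank-based calculation would be preferable for large samples. The article does not name a specific metric, so Gini is our illustrative choice.

```python
def gini(scores, is_bad):
    """Gini coefficient (2 * AUC - 1) for a score where higher means lower risk.

    Direct pairwise comparison of goods against bads: transparent but O(goods * bads).
    """
    goods = [s for s, bad in zip(scores, is_bad) if not bad]
    bads = [s for s, bad in zip(scores, is_bad) if bad]
    if not goods or not bads:
        raise ValueError("need at least one good and one bad outcome")
    wins = sum(1.0 if g > b else 0.5 if g == b else 0.0
               for g in goods for b in bads)
    auc = wins / (len(goods) * len(bads))
    return 2 * auc - 1
```

A Gini of 1.0 means every good account outscores every bad one, 0.0 means the score is no better than random, and a negative value means the score is ranking risk backwards. Computed on a validation sample that keeps the development inclusion criteria, it gives a like-for-like comparison with the development benchmark.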

7. Assume changes in production will be necessary

Model monitoring is intended to identify any issues with your models in production. There should be clearly documented guidelines that specify which activities, such as recalibration or redevelopment, should take place to investigate and remediate issues of different severity.
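Documented guidelines of this kind can be made explicit and repeatable by encoding them as tiers. In the sketch below, severity is measured as the relative drop in Gini from development to the current validation, and each tier maps to an action drawn from those the article mentions (more frequent reassessment, recalibration, rebuilding). The tier boundaries are hypothetical placeholders; each institution sets its own thresholds in its model risk policy.

```python
# Hypothetical tiers on the relative decline in Gini versus development.
# Each institution would define its own limits in its model risk policy.
SEVERITY_TIERS = [
    (0.05, "none", "continue routine monitoring"),
    (0.10, "amber", "increase monitoring frequency and investigate"),
    (0.20, "red", "recalibrate the model"),
]
REBUILD = ("severe", "redevelop the model")


def remediation(current_gini: float, development_gini: float):
    """Map the relative drop in Gini to a severity level and documented action."""
    if development_gini <= 0:
        raise ValueError("development Gini must be positive")
    drop = (development_gini - current_gini) / development_gini
    for limit, severity, action in SEVERITY_TIERS:
        if drop <= limit:
            return severity, action
    return REBUILD
```

With these placeholder tiers, a model whose Gini falls from 0.60 to 0.55 (about an 8% relative drop) lands in the amber tier, while a fall to 0.40 calls for redevelopment. Because the rules are explicit, the same result can be reproduced and shown to a regulator.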

Because models play such a critical role, it is vital that approved updates can be deployed into production quickly but safely. This should be a key consideration for any system used to execute your models: it should be possible to author changes quickly, then fully test them and submit them for approval without waiting months.

There should also be a clear audit history of who changed what and when, and who approved the changes, so there is full transparency and control.

FICO Platform provides this clarity, whilst enabling the required change agility; in many cases, the time to production can be reduced by 50%, meaning important model updates do not have to wait.
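The audit requirement ("who changed what and when, and who approved the changes") boils down to an append-only change history. The in-memory `AuditLog` below is a hypothetical illustration of that idea, not how FICO Platform records changes. It also flags changes with no approver or approved by their own author, which assumes a four-eyes policy of the kind many governance frameworks use.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ChangeRecord:
    """One immutable entry: what changed, who changed it, when, and who approved."""
    model_id: str
    change: str
    changed_by: str
    changed_at: datetime
    approved_by: Optional[str] = None


class AuditLog:
    """Append-only history of production model changes (in memory, for illustration)."""

    def __init__(self):
        self._records: list[ChangeRecord] = []

    def record(self, model_id, change, changed_by, approved_by=None):
        entry = ChangeRecord(model_id, change, changed_by,
                             datetime.now(timezone.utc), approved_by)
        self._records.append(entry)
        return entry

    def history(self, model_id):
        """All recorded changes for one model, oldest first."""
        return [r for r in self._records if r.model_id == model_id]

    def unapproved_or_self_approved(self):
        """Changes with no approver, or approved by their own author."""
        return [r for r in self._records
                if r.approved_by is None or r.approved_by == r.changed_by]
```

Records are frozen and only ever appended, so the history cannot be edited after the fact through this interface; a production system would add persistent, access-controlled storage on top of the same idea.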

